The industry needs an automatic, optimizing compiler that targets artificial intelligence (AI)-specific hardware. Today, many solution stacks offer only one or two of these three properties: automatic compilation, optimized results, and the right hardware target.

At Groq, we obsess over developer velocity: the idea that you should get from where you are to your goal as fast as possible. We can think of this as Time-to-Results and Time-to-Production. In this context, developer velocity means taking your AI workload from functional to production-optimized in a way that is as fast and, dare we say it, as fun as possible.

The AI space has some amazing productivity tools. Any developer can install the Hugging Face Transformers library and get a novel natural language processing model running on a GPU in minutes. The problem we hear from our customers is that this productivity stops before their inference performance goals are met. In other words, developer velocity starts out fast, but velocity over the entire journey, from idea to production, can be slow. This is especially true the further you innovate off the beaten path of mainstream models.

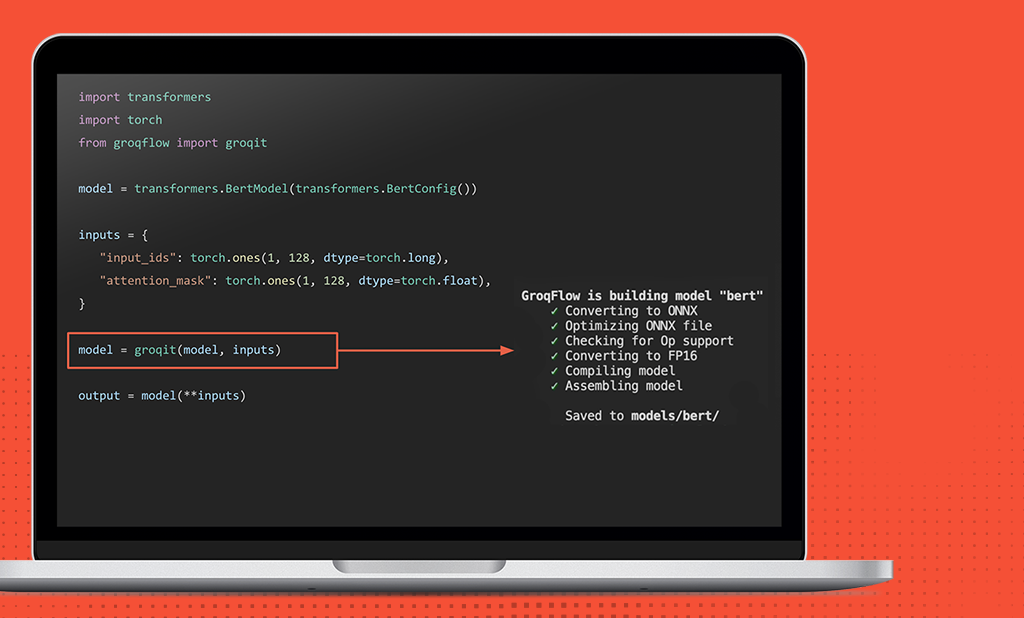

That’s why we’re introducing GroqFlow™, a simple interface for getting your models running on GroqNode™ servers by adding one line of code.

Getting GroqFlow ready for the broad spectrum of deep learning frameworks and deployment scenarios that exist today is going to be a journey. At Groq, though, we believe in sharing rough drafts early, and we want you to come on the journey with us. That is why the first release of GroqFlow will be publicly available on GitHub. You'll be able to see what we're doing, where we're going, and, more importantly, a variety of example programs that demonstrate ease of use and high developer velocity.

In the early stages of the project, GroqFlow will enable Groq customers to:

- Build supported PyTorch models into GroqChip™ programs and run them on GroqNode systems by adding one line of code

- Copy examples from Hugging Face and get them running on GroqNode servers in under 10 minutes

- Rapidly explore new use cases for Groq solutions, similar to those we shared in our Year of the Compiler blog post

The goal is to show you our vision for accelerating developer velocity and get your feedback as we begin our GroqFlow journey. So far, the reactions from customer previews of GroqFlow have been electric. We’ve heard things like:

- “This is glorious. We need this as soon as possible.” – Science and engineering research customer

- “This will make it much faster to get models up and running.” – Cybersecurity customer

We can’t wait to share this tool with you too, and show you developer velocity you can’t unsee. If you’re interested in a hands-on workshop with GroqFlow development on a GroqNode server, please contact [email protected].

Blog Contributors: Jeremy Fowers, Victoria Godsoe, Daniel Holanda Noronha, and Ramakrishnan Sivakumar