GroqRack™ Compute Cluster

Take your own cloud or AI Compute Center to the next level with on-prem deployments. Groq LPU™ AI inference technology is available in various interconnected rack configurations to match the model sizes you plan to run.

Groq On-prem Deployment Advantages

- Plug & Play Data Center Deployment

With no exotic cooling or power requirements, deploying Groq systems requires no major overhaul to your existing data center infrastructure.

- Lower Costs & Footprint

With routing built into the LPU, your budget and data center space go toward what matters, compute, instead of the high overhead costs of networking infrastructure.

- Available North American-based Supply

Groq currently has available supply, ready to get you up and running quickly with LPU AI inference technology that is designed and manufactured in North America.

The Groq LPU Building Blocks

The Groq LPU is a scalable software and hardware solution in which our compiler defines the LPU architecture, the foundation of our systems. Groq does not sell individual nodes, cards, or chips; customers access the LPU through the GroqCloud platform and our on-prem solutions. Learn more about each system component below.

Featuring eight compute GroqNode™ servers plus one redundant GroqNode, the GroqRack cluster provides an extensible, deterministic LPU network with an end-to-end latency of only 1.6µs for a single rack.

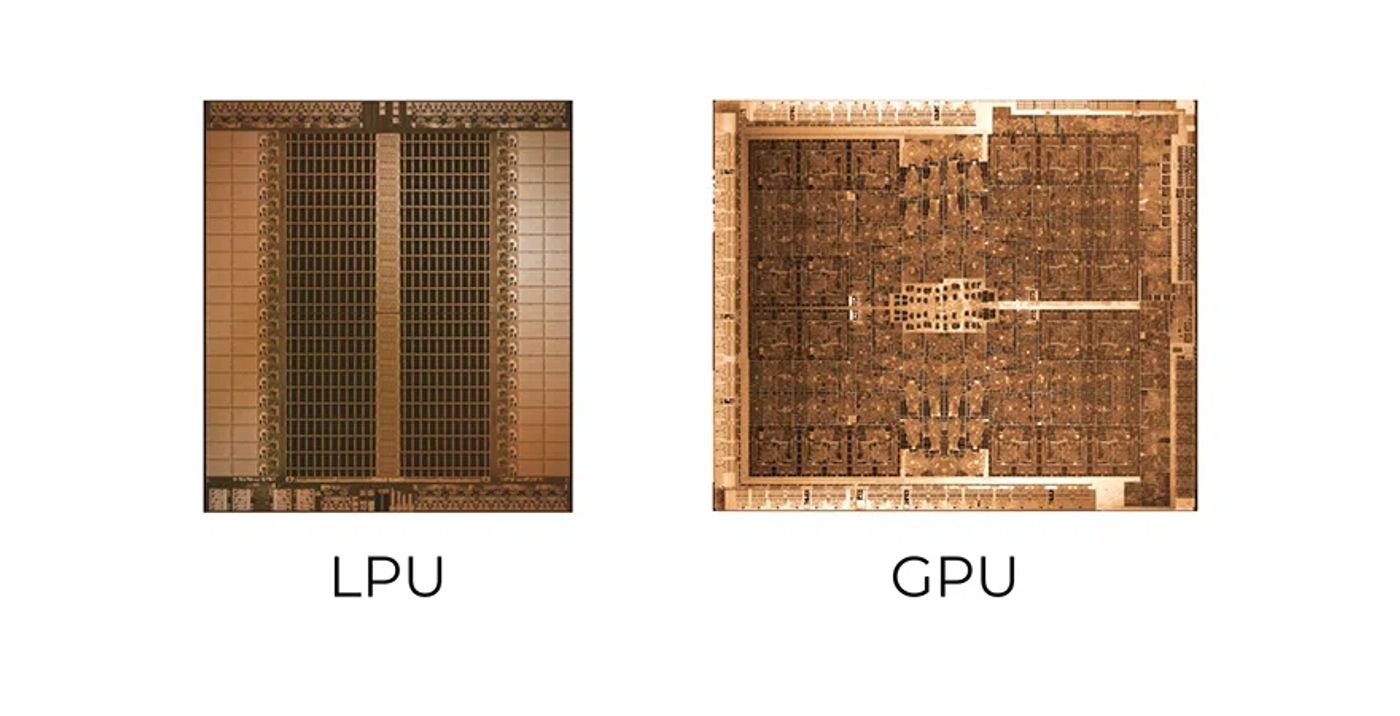

How the LPU Is Different From the GPU

Groq LPU AI inference technology is fundamentally different from a GPU, which was originally designed for graphics processing.

- The Groq Compiler is in control, rather than secondary to the hardware.

- Compute and memory are co-located on the chip, eliminating resource bottlenecks.

- A kernel-less compiler makes compiling new models fast and easy.

- With no caches or switches, the system scales seamlessly.

Leading GenAI Models

Take advantage of fast AI inference performance for leading GenAI models across text, audio, and vision modalities from providers like Meta, DeepSeek, Qwen, Mistral, Google, OpenAI, and more.

Connect With Our Team

We’d love to hear about your use case and discuss an on-prem deployment solution that meets your needs.