Mistral Saba Added to GroqCloud™ Model Suite

GroqCloud™ has added another openly available model to our suite – Mistral Saba. Mistral Saba is Mistral AI’s first specialized regional language model, custom-trained to serve specific geographies, markets, and customers. The model is hosted in Saudi Arabia, where Groq deployed its LPU-based AI inference infrastructure in December 2024 to build the region’s largest inference cluster. Brought online in just eight days, that installation established a critical AI hub for serving surging global compute demand, and hosting Mistral Saba there will help meet demand for specialized regional language models.

All GroqCloud users, from our Free Tier users to our Developer and Enterprise Tier customers, can access this model by logging into GroqCloud Console. Developers can call the newly hosted model through the API using the following model ID: Mistral-Saba-24b. For information on pricing, click here.
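As a minimal sketch of such an API call, the Python below builds a chat completion request using only the standard library. It assumes GroqCloud's OpenAI-compatible endpoint at `https://api.groq.com/openai/v1/chat/completions` and an API key in a `GROQ_API_KEY` environment variable; the helper names `build_chat_request` and `send` are hypothetical. The model ID is copied from this post, so confirm its exact casing against the model list in GroqCloud Console.

```python
import json
import os
import urllib.request

# Assumption: GroqCloud's OpenAI-compatible chat completions endpoint.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"
# Model ID as given in this post; check exact casing in GroqCloud Console.
MODEL_ID = "Mistral-Saba-24b"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat request for Mistral Saba."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first returned choice."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only hits the network when an API key is actually configured.
    key = os.environ.get("GROQ_API_KEY")
    if key:
        # Arabic prompt: "Hello! How are you?"
        print(send(build_chat_request("مرحبا! كيف حالك؟", key)))
```

Keeping request construction separate from sending makes the payload easy to inspect before spending any tokens.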

Performance

Mistral Saba is a 24B-parameter model with a 32k-token context window.

GroqCloud is currently running Mistral Saba at 330 tokens per second. Stay tuned for official third-party benchmarks from Artificial Analysis.
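To put the 330 tokens-per-second figure in concrete terms, this rough sketch estimates pure generation time for a response of a given length. It deliberately ignores time-to-first-token, prompt processing, and network latency, and assumes throughput stays constant; the function name is hypothetical.

```python
# Throughput quoted in this post; real speeds vary with load and request size.
QUOTED_TOKENS_PER_SECOND = 330.0


def estimated_generation_seconds(
    output_tokens: int, tokens_per_second: float = QUOTED_TOKENS_PER_SECOND
) -> float:
    """Rough decode time: output length divided by steady-state throughput."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second
```

At the quoted rate, a 1,000-token answer takes roughly 3 seconds of generation (1,000 / 330 ≈ 3.03).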

Mistral Saba Advantages

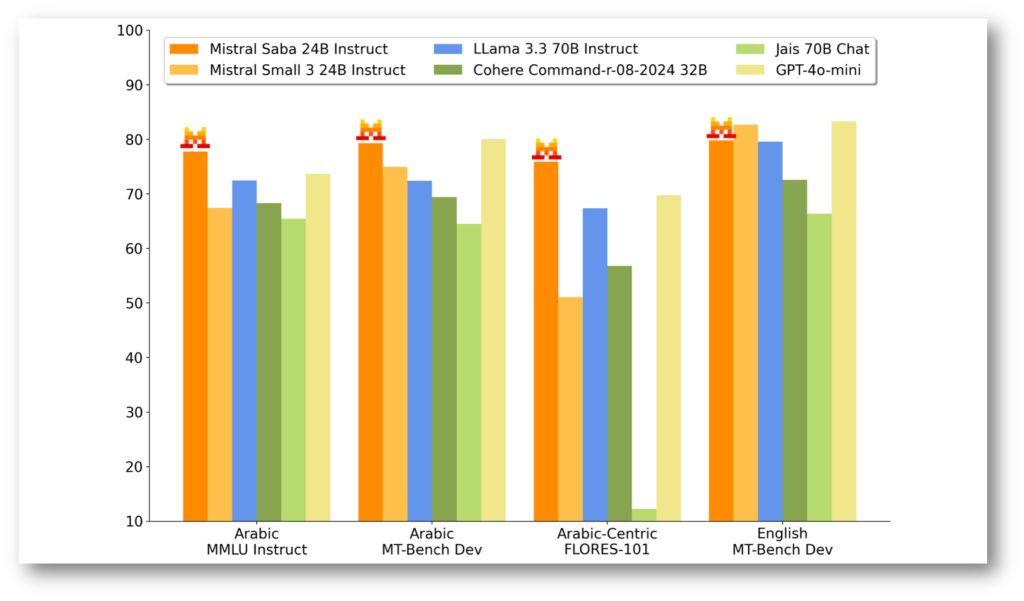

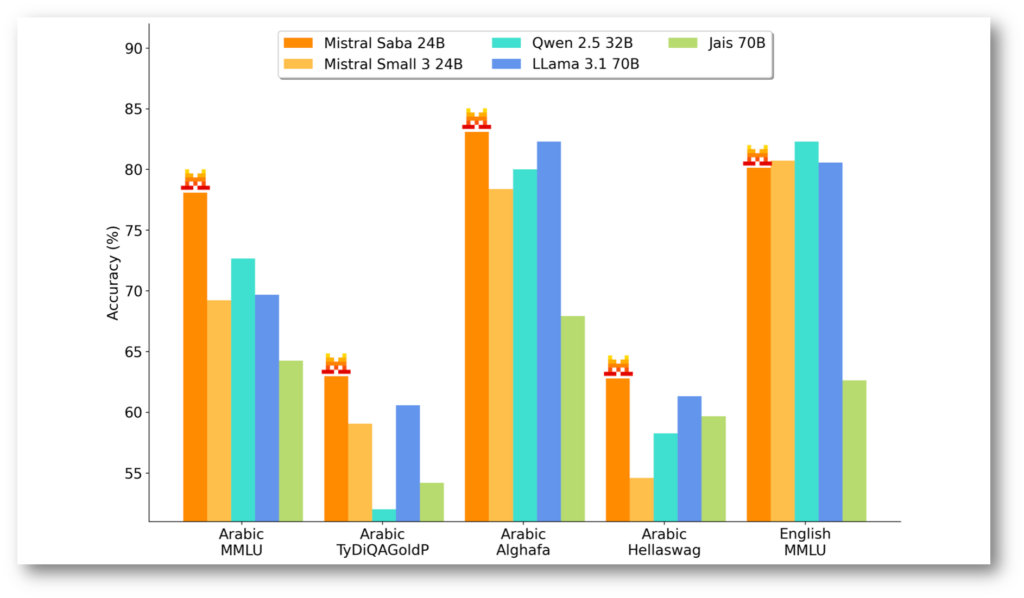

Mistral Saba was trained on meticulously curated datasets from across the Middle East and South Asia. The model provides more accurate and relevant responses than models over five times its size, while being significantly faster and cheaper to run. It can also serve as a strong base for training highly specific regional adaptations.

In keeping with the rich cultural cross-pollination between the Middle East and South Asia, Mistral Saba supports Arabic and many Indian-origin languages, and is particularly strong in South Indian-origin languages such as Tamil. This makes it versatile for multinational use across these interconnected regions. Learn more here.

Mistral Saba + Groq Fast AI Inference

Making AI accessible to all means offering tools that address every culture and language. Increasingly, larger general-purpose models that are proficient in multiple languages won’t cut it for use cases that require linguistic nuance, cultural background, and in-depth regional knowledge. The demand for models that are not just fluent but native to regional context is here, and Mistral AI is answering the call with Mistral Saba, its first specialized regional language model.

The model is ideal for:

- Conversational Support: Mistral Saba offers swift and precise Arabic responses. It can power virtual assistants that engage users in natural, real-time conversations in Arabic, improving user interactions across various platforms.

- Domain-Specific Expertise: Through fine-tuning, Mistral Saba can become a specialized expert in various industries, offering insights and accurate responses, all within the context of Arabic language and culture.

- Cultural Content Creation: Mistral Saba excels in generating culturally relevant content like educational resources. By understanding local idioms and cultural references, it can help businesses and organizations create authentic and engaging content that resonates with Middle Eastern audiences.

With Mistral Saba quickly becoming a powerful solution that understands the linguistic and cultural nuances of the Middle East, combined with the speed of Groq AI inference infrastructure and the scalability GroqCloud offers, the use cases are limitless.

Build Fast

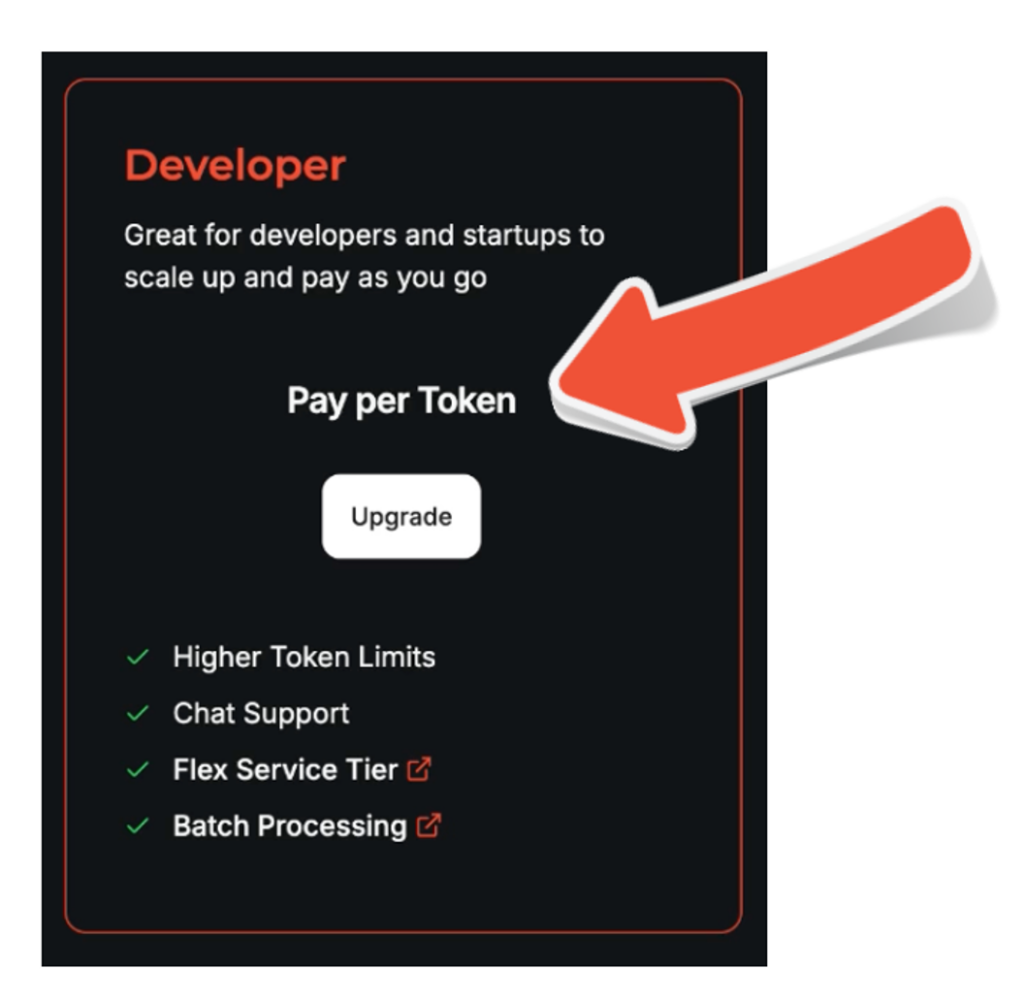

We’ve expanded access to GroqCloud™ with our newly launched Developer Tier – a self-serve access point for higher rate limits and pay-as-you-go on-demand access. You can learn more in our blog here or sign up today.

If you’re looking to take your access to the next level, our enterprise offerings are built for custom solutions and large-scale needs that require enterprise-grade capacity and dedicated support. Reach out to our team here about Enterprise access.