LLMs Inside the Product: A Practical Field Guide

Building with LLMs has taught me one clear lesson: the best AI feature is often invisible.

When it works, the user doesn’t stop to think “that was AI.” They just click a button, get an answer quickly, and move on with their task.

When it doesn’t work, you notice right away: the spinner takes too long, or the answer sounds confident but is not true. I’ve hit both of these walls many times. And each time, the fix was less about “smarter AI” and more about careful engineering choices. Use only the context you need. Ask for structured output. Keep randomness low when accuracy is important. Allow the system to say “I do not know.”

This guide is not about big research ideas. It’s about practical steps any engineer can follow to bring open-source LLMs inside real products. Think of it as a field guide with simple patterns, copy-ready code, and habits that make AI features feel reliable, calm, and fast.

How It Works — The Four‑Step Loop

Every reliable AI feature follows the same loop. Keep it consistent. Boring is good.

1) Read

What: Take the user input and only the smallest slice of app context you need. More context means higher cost, slower responses, and more room for the model to drift.

Examples

- Support — “Where is my order?” → pass the user ID and the last order summary, not the entire order history.

- Extraction — “Pull names and dates from this email thread” → pass the thread text only, not unrelated attachments.

- Search — “Find refund policy” → pass top three snippets from your docs, not the whole knowledge base.
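As a rough illustration of the Search example above, the sketch below picks the top three snippets before anything is sent to the model. The scoring is a deliberately naive word overlap, and `score`, `top_snippets`, and `build_context` are hypothetical names chosen for this example; a real app would more likely use its existing search index or an embedding-based re-ranker.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def score(query: str, snippet: str) -> int:
    """Count how many distinct query words also appear in the snippet."""
    return len(words(query) & words(snippet))


def top_snippets(query: str, snippets: list[str], k: int = 3) -> list[str]:
    """Return the k best-scoring snippets, best first; ties keep input order."""
    ranked = sorted(snippets, key=lambda s: score(query, s), reverse=True)
    return ranked[:k]


def build_context(query: str, snippets: list[str], k: int = 3) -> str:
    """Join only the selected snippets into the context passed to the model."""
    return "\n---\n".join(top_snippets(query, snippets, k))
```

Whatever the ranking method, the design point is the same: the model sees `k` snippets, never the whole knowledge base.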

2) Constrain

What: Set explicit rules so the model's output stays within bounds your application can handle.

Do this

- System prompt as a contract

  - State what the assistant is and is not

  - Require valid JSON that matches a schema

  - If information is missing, ask the user a short follow‑up question or answer “I don’t know.”

  - Keep privacy rules explicit (do not log sensitive data)

- Version your prompts and test them

- Match temperature to the task (there is no one setting that fits all)

  - Low (≈0.0–0.2): Extraction, classification, validation, RAG answers with citations, reliable tool choice

  - Medium: Templated drafts and light tone variation

  - High: Brainstorming and creative copy where variety matters

Keep context tight in all cases. If your stack supports it, use a seed in tests for repeatability.
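One way to keep these rules in one place is a small request builder that pairs a versioned system prompt with a per-task temperature. This is a minimal sketch, not tied to any SDK: `PromptVersion`, `TEMPERATURE_BY_TASK`, and `build_request` are hypothetical names, the prompt text is an example, and the medium and high values (0.5 and 0.9) are assumptions, since only the low range is given above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    prompt_id: str  # stored with every request so logs can name the exact prompt
    text: str


SUPPORT_PROMPT_V2 = PromptVersion(
    prompt_id="support-answer@v2",
    text=(
        "You are a support assistant for order questions only. "
        "You are not a legal or financial advisor. "
        "Reply with valid JSON that matches the provided schema. "
        "If information is missing, ask one short follow-up question "
        "or answer \"I don't know.\""
    ),
)

# Low values follow the range given above; 0.5 and 0.9 are assumed placeholders.
TEMPERATURE_BY_TASK = {
    "extraction": 0.0,
    "classification": 0.0,
    "rag_answer": 0.2,
    "templated_draft": 0.5,
    "brainstorm": 0.9,
}


def build_request(task: str, prompt: PromptVersion, user_input: str,
                  seed: Optional[int] = None) -> dict:
    """Assemble request settings for one task; unknown tasks fail loudly."""
    if task not in TEMPERATURE_BY_TASK:
        raise ValueError(f"No temperature configured for task: {task}")
    request = {
        "prompt_id": prompt.prompt_id,
        "messages": [
            {"role": "system", "content": prompt.text},
            {"role": "user", "content": user_input},
        ],
        "temperature": TEMPERATURE_BY_TASK[task],
    }
    if seed is not None:
        # Only useful if your stack supports seeded sampling, e.g. in tests.
        request["seed"] = seed
    return request
```

Failing on an unknown task is a deliberate choice here: a new feature has to pick its temperature explicitly rather than inherit a default.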

3) Act

What: Produce output that the next step of your workflow can consume directly, with no further processing.

When to use what:

- Structured Outputs when the next step is programmatic, e.g., for updating UI, storing fields, and running validation.

- Why: The output is structured, parsable data, so the next step of a workflow or application can consume it with no manual processing.

- Example: Extract {name, date, amount} from an invoice.

Code: Structured Outputs with Pydantic

```python
from groq import Groq
from pydantic import BaseModel
from typing import Literal
import json

client = Groq()


class ProductReview(BaseModel):
    product_name: str
    rating: float
    sentiment: Literal["positive", "negative", "neutral"]
    key_features: list[str]


response = client.chat.completions.create(
    model="moonshotai/kimi-k2-instruct",
    messages=[
        {"role": "system", "content": "Extract product review information from the text."},
        {
            "role": "user",
            "content": "I bought the UltraSound Headphones last week and I'm really impressed! The noise cancellation is amazing and the battery lasts all day. Sound quality is crisp and clear. I'd give it 4.5 out of 5 stars.",
        },
    ],
    response_format={
        "type": "json_schema",
        "json_schema": {
            "name": "product_review",
            "schema": ProductReview.model_json_schema()
        }
    }
)

review = ProductReview.model_validate(json.loads(response.choices[0].message.content))
print(json.dumps(review.model_dump(), indent=2))
```

Learn more: Structured outputs docs

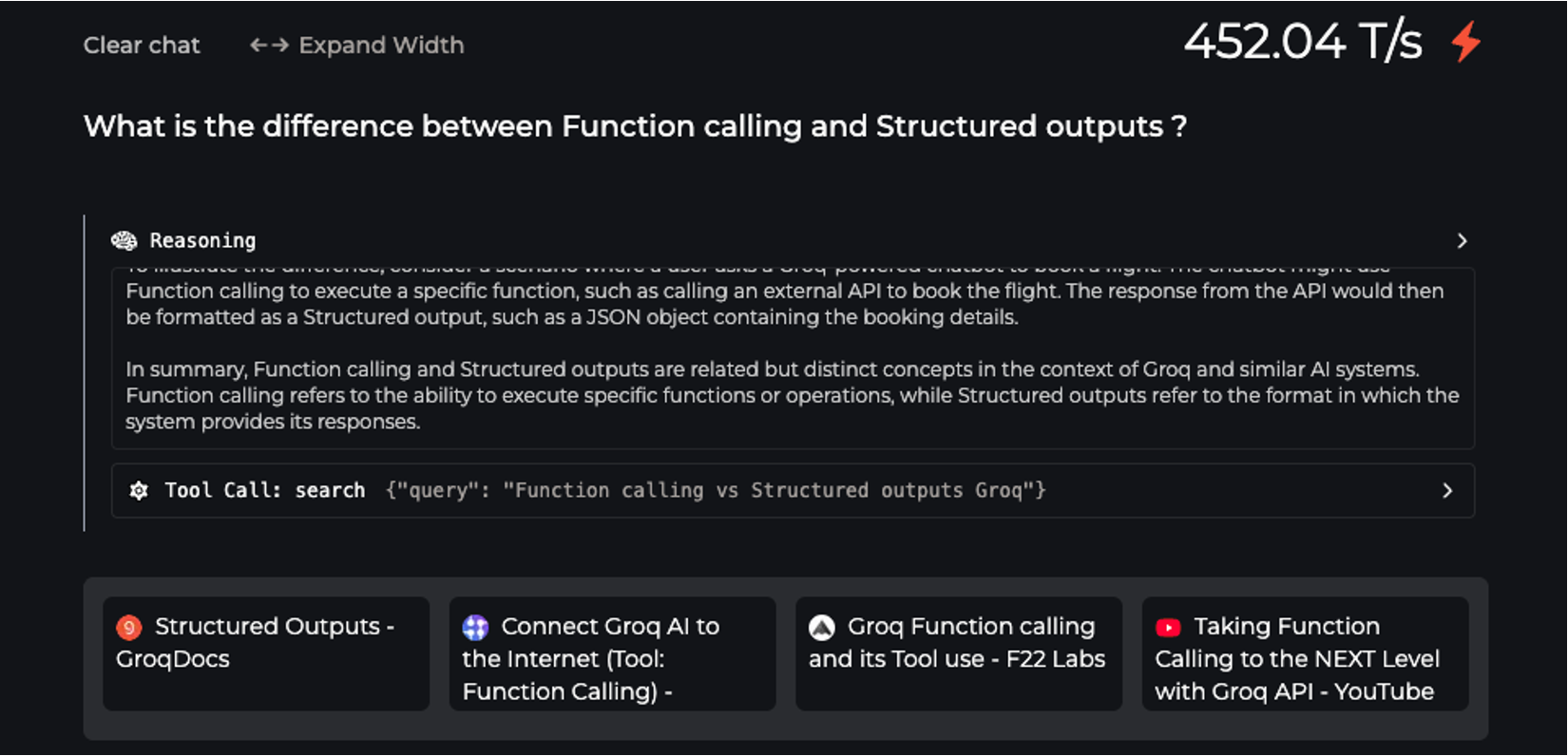

- Function calls (tools) when the model needs live data or to trigger an action that your code controls such as search, fetch, compute, notify, or connect to external systems.

- Why: Tools let the model use fresh information instead of relying only on what it learned during training. That means it can query a database, call an API, or look up the latest records without inventing answers. The model proposes the action, your code decides whether to run it, and you keep a clear audit trail.

- Example: The model calls search_docs() to find relevant text, then render_chart() to create a visualization, and finally explains the result back to the user.

- Plain text when the result is narrative only, such as a summary or a short answer.

- Why: Simplest path when nothing else needs to consume the output.

Code: function calling (tools)

```python
import json
from groq import Groq

# Initialize Groq client
client = Groq()
model = "llama-3.3-70b-versatile"


# Define weather tools
def get_temperature(location: str):
    # This is a mock tool/function. In a real scenario, you would call a weather API.
    temperatures = {"New York": "22°C", "London": "18°C", "Tokyo": "26°C", "Sydney": "20°C"}
    return temperatures.get(location, "Temperature data not available")


def get_weather_condition(location: str):
    # This is a mock tool/function. In a real scenario, you would call a weather API.
    conditions = {"New York": "Sunny", "London": "Rainy", "Tokyo": "Cloudy", "Sydney": "Clear"}
    return conditions.get(location, "Weather condition data not available")


# Define system messages and tools
messages = [
    {"role": "system", "content": "You are a helpful weather assistant."},
    {"role": "user", "content": "What's the weather and temperature like in New York and London? Respond with one sentence for each city. Use tools to get the information."},
]

tools = [
    {
        "type": "function",
        "function": {
            "name": "get_temperature",
            "description": "Get the temperature for a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The name of the city",
                    }
                },
                "required": ["location"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "get_weather_condition",
            "description": "Get the weather condition for a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The name of the city",
                    }
                },
                "required": ["location"],
            },
        },
    }
]

# Make the initial request
response = client.chat.completions.create(
    model=model, messages=messages, tools=tools, tool_choice="auto", max_completion_tokens=4096, temperature=0.5
)

response_message = response.choices[0].message
tool_calls = response_message.tool_calls

# Process tool calls
messages.append(response_message)

available_functions = {
    "get_temperature": get_temperature,
    "get_weather_condition": get_weather_condition,
}

# tool_calls is None when the model answers without calling a tool
for tool_call in tool_calls or []:
    function_name = tool_call.function.name
    function_to_call = available_functions[function_name]
    function_args = json.loads(tool_call.function.arguments)
    function_response = function_to_call(**function_args)

    # Return each result to the model, linked to the call that requested it
    messages.append(
        {
            "role": "tool",
            "content": str(function_response),
            "tool_call_id": tool_call.id,
        }
    )

# Make the final request with tool call results
final_response = client.chat.completions.create(
    model=model, messages=messages, tools=tools, tool_choice="auto", max_completion_tokens=4096
)

print(final_response.choices[0].message.content)
```

Learn more: Tool use docs
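The idea that the model proposes an action while your code decides whether to run it can be sketched as a small gate in front of tool execution. The version below assumes an allowlist and an in-memory audit list; `ALLOWED_TOOLS`, `audit_log`, and `run_tool` are hypothetical names for this illustration, and a real app would write the audit entries to durable, server-side storage.

```python
import json
import time


def get_temperature(location: str) -> str:
    # Mock tool, standing in for a real weather API call.
    temperatures = {"New York": 22, "London": 18}
    return str(temperatures.get(location, "unknown"))


# Only tools listed here can ever run, whatever the model proposes.
ALLOWED_TOOLS = {"get_temperature": get_temperature}

# In-memory audit trail for the sketch.
audit_log: list[dict] = []


def run_tool(name: str, raw_arguments: str) -> str:
    """Run a proposed tool call if it is allowed, recording every attempt."""
    entry = {"tool": name, "arguments": raw_arguments, "ts": time.time()}
    if name not in ALLOWED_TOOLS:
        entry["status"] = "rejected"
        audit_log.append(entry)
        return "Error: tool not allowed"
    try:
        args = json.loads(raw_arguments)
        result = ALLOWED_TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Covers malformed JSON and arguments that do not fit the function.
        entry["status"] = "failed"
        entry["error"] = str(exc)
        audit_log.append(entry)
        return "Error: invalid tool arguments"
    entry["status"] = "ok"
    audit_log.append(entry)
    return str(result)
```

Returning an error string instead of raising lets the failure be passed back to the model as the tool result, so it can retry or explain the problem to the user.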

4) Explain

What: Show the user the steps, tools, and citations so they have more confidence in your app's AI-generated outputs.

Examples

- Append a short “What I used” note with source titles or IDs. Groq's Compound model, for example, shows its answer with sources attached for clarity and trust.

- In extraction, show a small preview of the matched text.

- In tool flows, show which tools ran and in what order, then keep the logs server side.
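A “What I used” note can be assembled from data the app already has. The sketch below assumes each source is a dict with `title` and `id` keys and that the app keeps an ordered list of the tool names it ran; the function name and note format are illustrative choices, not a fixed convention.

```python
def what_i_used(sources: list[dict], tools_run: list[str]) -> str:
    """Render a short, user-facing note listing sources and tools in order."""
    lines = ["What I used:"]
    for source in sources:
        lines.append(f"- Source: {source['title']} ({source['id']})")
    if tools_run:
        lines.append("- Tools, in order: " + " -> ".join(tools_run))
    if len(lines) == 1:
        # Nothing was consulted; say so rather than showing an empty note.
        lines.append("- No external sources or tools")
    return "\n".join(lines)
```

The note carries only titles, IDs, and tool names; arguments and raw results stay in the server-side logs.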

Core patterns you’ll reuse

Domain‑Specific Language (DSL): A small language designed for a specific domain. In apps, this often means search filters, a sandboxed SQL query, a chart spec, or an email template.

| Pattern | What it Means | Example Request | Typical Output to Your App |
|---|---|---|---|
| Router | Classify and route to the right handler or model | “Is this billing or technical?” | {category: "billing"} |
| Extractor | Turn messy text into clean fields | “Grab names and dates from this email” | {names: [...], dates: [...]} |
| Translator | Convert intent to a safe DSL | “Show paid invoices this month per region” | Filters or SQL for a sandbox, or chart spec |
| Summarizer | Shorten or re-tone text | “Summarize the meeting for a new hire” | Short bullet list with optional citations |
| With Tools | Model proposes actions; app executes | “Search policy, then draft the reply” | Tool calls → tool results → short answer |
| Orchestrator | Chain steps while the app keeps control | “Verify doc, extract fields, request missing” | Plan → tool calls → JSON result + next steps |
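To make the Translator row concrete, here is a minimal sketch in which the model is asked only for a JSON object of filters and the app turns that into a parameterized query. The table name `invoices`, the column allowlist, and `filters_to_sql` are assumptions made for this example; the query still belongs in a sandbox or against a read-only connection.

```python
# Columns the model is allowed to filter on; anything else is rejected.
ALLOWED_COLUMNS = {"status", "region", "month"}


def filters_to_sql(filters: dict) -> tuple[str, list]:
    """Turn validated filters into a parameterized query and its parameters."""
    unknown = set(filters) - ALLOWED_COLUMNS
    if unknown:
        raise ValueError(f"Unsupported filter columns: {sorted(unknown)}")
    # Column names come from the allowlist; values travel as bound parameters.
    clauses = [f"{column} = ?" for column in filters]
    where = " AND ".join(clauses) if clauses else "1 = 1"
    sql = f"SELECT region, SUM(amount) FROM invoices WHERE {where} GROUP BY region"
    return sql, list(filters.values())
```

Because the model never writes SQL itself, a strange or hostile request can at worst produce a rejected filter, not an arbitrary statement.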

Shipping Safely: Tests, Monitoring, and Fallbacks

Before launch:

- Write prompt unit tests that check the output format you expect. For JSON, assert required fields. For plain text, check for keywords, structure, style, or refusal phrases.

- Build a small eval set from real questions. Include expected outcomes and allowed refusals.

- Run in shadow mode or behind a feature flag and log everything.
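The first two steps can start as a few plain functions. The sketch below assumes the invoice extraction task from earlier, with required fields `name`, `date`, and `amount`, and a fixed refusal phrase; `check_invoice_output`, `case_passes`, and `run_eval` are hypothetical helpers, and `model_fn` stands for whatever function wraps your model call.

```python
import json

REQUIRED_FIELDS = {"name", "date", "amount"}
REFUSAL = "I don't know"  # assumed refusal phrase for this sketch


def check_invoice_output(raw: str) -> list[str]:
    """Format check: return a list of problems; an empty list means pass."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]
    missing = REQUIRED_FIELDS.difference(data)
    return [f"missing field: {name}" for name in sorted(missing)]


def case_passes(case: dict, raw: str) -> bool:
    """A case passes on an allowed refusal or on an exact expected match."""
    if raw.strip() == REFUSAL:
        return bool(case.get("allow_refusal"))
    if check_invoice_output(raw):
        return False
    return json.loads(raw) == case.get("expected")


def run_eval(model_fn, eval_set: list[dict]) -> float:
    """Return the fraction of eval cases that pass."""
    passed = sum(1 for case in eval_set if case_passes(case, model_fn(case["input"])))
    return passed / len(eval_set)
```

Rerunning `run_eval` whenever the prompt or model version changes gives a single number to compare between versions.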

What to track in production:

- Latency p50 and p95

- Tokens in and out

- Model and prompt versions

- Tool call success and failure

- Invalid JSON rate

- Refusal rate

- User edit rate (compare model output to final user text)

- Citation correctness (check answer against cited sources)

You can monitor these signals in the Groq Console dashboard, which gives you logs, metrics, usage, and batch insights to see how your AI features behave in real workloads.
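If you also want these signals in your own logs, a sketch along the following lines may be enough to start. The record fields and function names are assumptions for illustration; the percentile helper uses the standard library's `statistics.quantiles` with 100 buckets, so it needs at least two latency samples.

```python
import json
import statistics


def make_log_record(prompt_id, model, latency_ms, tokens_in, tokens_out,
                    invalid_json, refused) -> str:
    """Build one JSON log line carrying the versions and signals to track."""
    return json.dumps({
        "prompt_id": prompt_id,
        "model": model,
        "latency_ms": latency_ms,
        "tokens_in": tokens_in,
        "tokens_out": tokens_out,
        "invalid_json": invalid_json,
        "refused": refused,
    })


def latency_percentiles(latencies_ms: list[float]) -> dict:
    """Compute p50 and p95 from raw latency samples."""
    # quantiles(n=100) returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94]}


def rate(records: list[dict], field: str) -> float:
    """Share of records where a boolean field (e.g. invalid_json) is true."""
    return sum(1 for record in records if record[field]) / len(records)
```

Keeping the prompt ID and model name on every line is what makes a later regression traceable to a specific change.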

Fallbacks that work

- If the task is unanswerable, return “I do not know” with a next step.

- If results look long or slow, stream partial results and keep the UI responsive.

- Use small-then-big model routing where it matters: start with a smaller, faster, and cheaper model for most requests. If the output is incomplete, uncertain, or flagged as too complex, escalate the same request to a larger model. This way you save cost and latency on routine tasks, while still handling difficult edge cases with more power.
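Small-then-big routing can be sketched with two callables and a completeness check. Here “incomplete” is taken to mean “not valid JSON with all required fields”, which is only one possible escalation rule; `small_model` and `big_model` are placeholders for functions that wrap your actual model calls.

```python
import json
from typing import Callable


def is_complete(raw: str, required: set[str]) -> bool:
    """True if the output is a JSON object containing every required field."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and required.issubset(data)


def answer_with_escalation(
    prompt: str,
    small_model: Callable[[str], str],
    big_model: Callable[[str], str],
    required: set[str],
) -> tuple[str, str]:
    """Try the small model first; escalate the same prompt if it falls short."""
    first = small_model(prompt)
    if is_complete(first, required):
        return first, "small"
    return big_model(prompt), "big"
```

Returning which tier answered makes it easy to log the escalation rate and check whether the small model is pulling its weight.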

Common Pitfalls and Quick Fixes

- Too much context → Fetch only what you need and re‑rank.

- Letting the model touch prod data directly → Always use tools and a safe layer.

- Using chat for everything → Many jobs are better as a simple extractor or router.

- Verbose answers driving cost → Prefer concise styles and structured fields.

- No versioning → Store prompt IDs and model versions in every log line.

A Short Checklist You Can Use Today

- [ ] Write a clear system prompt and a strict JSON schema.

- [ ] Choose temperature for the task and keep context tight.

- [ ] Enforce JSON validation before UI or DB updates.

- [ ] Add one tool, log every call, and review failures weekly.

- [ ] Track latency, tokens, prompt and model versions, refusals, and invalid JSON.

- [ ] Launch with a feature flag and a simple fallback plan.

Don’t Forget

Boring AI features are reliable AI features: they feel invisible to users and just work. Read only what you need. Constrain with clear rules. Act with structured outputs and safe tools. Explain what happened. Start with the smallest useful feature. Use the patterns that fit your use case. Monitor everything. Improve based on real user behavior, not theoretical performance metrics. The goal isn’t to build impressive AI demos. It’s to ship features that users depend on every day.