Advancing the American AI Stack

Introduction

Power has always flowed from the control of the world's essential resources. Once it was steel, then oil, then data. Today, it is AI compute, and specifically, the ability to run AI systems efficiently at global scale. Whoever controls AI compute will shape the century ahead.

Compute is fast becoming the foundation of global economic growth. In the United States, investment in AI infrastructure (from data centers to semiconductors and energy systems) is already moving the needle: J.P. Morgan estimates that data-center spending alone could boost U.S. GDP by up to 20 basis points over the next two years [1]. According to The Economist, investments tied to AI now account for 40 percent of America's GDP growth over the past year, equal to the amount contributed by consumer spending growth. That statistic would be staggering regardless of how long AI has been part of the economy, but this is just the start.

The next decade of global competition will be defined not only by who invents the most powerful AI systems, but also by who can deploy and operate them securely, efficiently, and at scale. The real battleground increasingly centers on inference, the computational power required to run trained AI models and deliver real-time results to billions of users worldwide.

While training compute builds AI capabilities, inference compute delivers them. As AI applications move from the laboratory to deployment, inference [2] becomes the bottleneck that determines which nations can actually operationalize artificial intelligence at global scale. This deployment-driven reality is how the industry operates today, and it can serve as the model that informs U.S. export policy.

The question facing policymakers is whether to recognize and enable the existing model of a vibrant American AI ecosystem, or to construct something entirely new. The evidence suggests that a nuanced approach to the former will better serve American strategic interests.

Organizing an American AI Export Program

The Trump Administration's Executive Order on Promoting the Export of the American AI Technology Stack (EO) recognizes that our allies are hungry for American compute, and that the United States must dominate the "away game" before geopolitical rivals fill the vacuum.

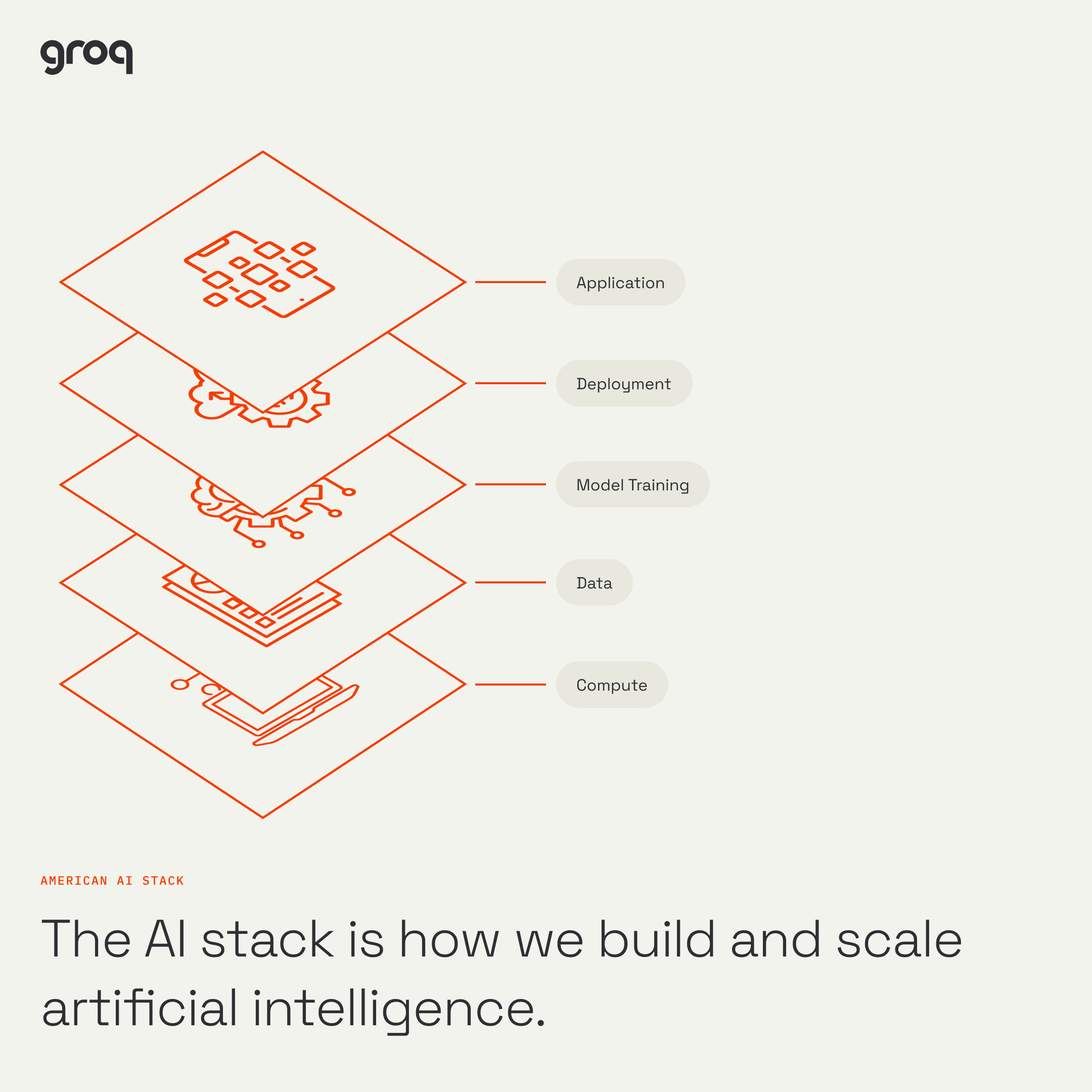

This EO represents a watershed moment in American technology policy. Previous administrations treated AI exports primarily through a defensive lens, focusing on what to restrict rather than what to enable. The Trump Administration has inverted that paradigm, recognizing that American AI leadership depends not just on preventing adversaries from acquiring our technology, but on ensuring allies adopt democratically aligned systems, standards, and operational models before alternatives take root. The EO's stack and Groq's definition of the "American AI Stack" (five discrete layers spanning hardware, data, models, orchestration, and applications) draw the layer boundaries differently, but both recognize that competitive advantage in AI infrastructure comes not from any single component, but from how the layers integrate into deployable systems.

In practice, how the compute systems and data architecture within the stack function and interact depends on the stack’s overall structure and the opportunities it addresses. Each application, for example, may require a different selection and configuration of models, hardware, and deployment solutions. The stack is therefore a dynamic system whose interchangeable components work in concert, rather than a disjointed set of layers. Because of this dynamism, an export program organized around rigid layer boundaries could inadvertently slow the cross-layer innovation that gives U.S. technology its competitive advantage over more centralized, state-directed competitors.

The Department of Commerce’s Request for Information (RFI) has asked industry to weigh in on how best to structure an export framework, including whether consortia should play a central role. That question reflects a thoughtful approach.

A consortium model is one way to organize exports, especially for large, integrated projects that benefit from a single coordinating body. At the same time, the RFI leaves room for other structures, such as marketplace models, that allow trusted providers to contribute individual components under shared standards. Inviting industry input acknowledges that private-sector firms often understand integration requirements and technology lifecycles with greater specificity than regulators, and that choosing a structure that is too rigid could inadvertently slow the innovation that underpins America’s advantage in AI. We believe this openness is essential: the most effective framework will not rely on a single model, but on multiple coordinated options that promote both competition and speed.

How the AI Market Already Works

Before examining policy options, it's worth understanding how the American AI market already balances competition with coordination. The evidence suggests a clear pattern: companies form private consortia in various configurations to address specific needs and challenges, while also competing vigorously in an open marketplace for opportunities where they can play a different role.

Consider how inference infrastructure actually reaches global markets. Groq's October 2025 partnership with IBM illustrates the pattern: integrating Groq's GroqCloud inference platform with IBM's watsonx Orchestrate environment required extensive technical coordination on load balancing, model optimization, and enterprise security protocols. This is a privately formed consortium driven by customer requirements. IBM's healthcare and financial services clients need guaranteed interoperability between the inference acceleration of Groq's Language Processing Unit (LPU) and IBM's orchestration layer. The partnership also integrates Red Hat's open-source vLLM technology with Groq's LPU architecture, another layer of technical coordination that happens because the market demands it, not because the government mandated it [3].

Similarly, Groq's deployments through partners like Dell Technologies demonstrate how hardware and infrastructure layers coordinate. Regional distributors such as Aljammaz Technologies in the Middle East combine Groq inference systems with Dell-manufactured servers and local operational expertise [4]. Each integration requires validated compatibility protocols for power management, cooling systems, and data throughput. These are operational consortia, formed organically to meet deployment requirements in specific markets.

The pattern repeats across the American AI ecosystem. NVIDIA's partnerships with cloud providers, OEMs, and system integrators show how the training side of the stack works together. Companies like Microsoft, Meta [5], and Oracle [6] form technical consortia for large-scale training projects, coordinating on everything from liquid cooling infrastructure to high-speed networking fabrics while maintaining complete independence to pursue different partnerships for other initiatives. When OpenAI needs training capacity, it assembles the partners whose technologies integrate effectively for that specific deployment.

None of these partnerships required government coordination. They emerged because technical integration demanded it, and because companies saw commercial value in solving interoperability challenges together. The hardware, software, and service providers each maintain independent innovation paths while ensuring their offerings work together when deployed. This is the natural state of American AI innovation: fierce competition alongside pragmatic collaboration, in each case based on what best serves customers.

The Path Forward

This hybrid model—marketplace competition with industry-led, project-specific consortia—is how the American AI stack achieves both rapid innovation and reliable interoperability. The policy question before the Administration is not whether to create this model, but whether to recognize it and enable it in the export context.

In our view, the answer lies in understanding that both marketplace flexibility and consortium coordination play important roles. The most effective approach combines elements of each: a marketplace of pre-certified providers with the ability to form consortia where integration requirements demand it. This preserves competition and innovation while enabling the coordinated delivery that complex deployments require. It is not “marketplace versus consortia.” Rather, the optimal frame is a marketplace with consortia, formed by companies that have already done the technical integration work.

By contrast, consortium structures that pre-determine which companies can work together risk freezing the very dynamism that gives American AI its competitive edge. The recommendations below detail how to structure an export program that preserves this competitive dynamism while meeting security and interoperability requirements.

The State of American AI Leadership

The United States currently operates from a position of strength. While training compute (the power required to develop AI models) has grown by a factor of 4.2 per year since 2018, inference compute is expanding even faster as enterprises shift from experimentation to production deployment [7]. Inference now accounts for the majority of AI compute demand globally, and this trend will accelerate: by 2030, running AI systems at scale could require orders of magnitude more inference capacity than exists today. Training happens once to create a model; inference happens every time that model is used [8]. As AI moves to production deployment, aggregate inference demand quickly dwarfs training requirements, making inference efficiency essential for sustainable, large-scale AI operations.
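The asymmetry between one-time training and ongoing inference can be made concrete with a back-of-envelope calculation. The sketch below assumes the widely used rules of thumb for dense transformer models: about 6·N·D floating-point operations to train a model with N parameters on D tokens, and about 2·N operations per token processed at inference. These approximations, the function names, and the 70-billion-parameter example model are illustrative assumptions, not figures from this paper. Under those assumptions, cumulative inference compute matches the entire training budget once the model has processed about 3·D tokens, independent of model size. The last helper simply compounds the 4.2x annual growth rate cited above.

```python
"""Back-of-envelope comparison of training and inference compute.

Assumes common dense-transformer rules of thumb (approximations only):
  training  ~ 6 * N * D FLOPs  (N parameters, D training tokens)
  inference ~ 2 * N FLOPs per token processed
"""


def training_flops(params: float, train_tokens: float) -> float:
    """One-time cost to train a model (rule of thumb: 6 * N * D)."""
    return 6.0 * params * train_tokens


def inference_flops(params: float, served_tokens: float) -> float:
    """Cumulative cost to serve tokens (rule of thumb: 2 * N per token)."""
    return 2.0 * params * served_tokens


def breakeven_served_tokens(train_tokens: float) -> float:
    """Served tokens at which cumulative inference equals training compute.

    Solving 2 * N * T = 6 * N * D gives T = 3 * D; N cancels out.
    """
    return 3.0 * train_tokens


def growth_multiple(annual_factor: float, years: int) -> float:
    """Total growth after compounding an annual multiplier for `years` years."""
    return annual_factor ** years


if __name__ == "__main__":
    # Hypothetical model: 70B parameters trained on 15T tokens.
    n, d = 70e9, 15e12
    t = breakeven_served_tokens(d)
    print(f"training FLOPs:             {training_flops(n, d):.2e}")
    print(f"break-even served tokens:   {t:.2e}")
    print(f"inference FLOPs at 2x that: {inference_flops(n, 2 * t):.2e}")
    # The 4.2x-per-year training growth rate, compounded over six years.
    print(f"4.2x/yr over 6 years:       {growth_multiple(4.2, 6):,.0f}x")
```

If a deployed model processes well beyond 3·D tokens over its lifetime, inference becomes its dominant compute cost, which is the mechanism behind the claim that aggregate inference demand outgrows training.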

Over the last half-decade, the United States has developed approximately 70 percent of the world's leading AI models and, according to the U.S. Federal Reserve, "dominates" high-end AI training compute globally, controlling 74 percent of capacity [9]. U.S. companies also lead in inference infrastructure, with American-designed systems powering the majority of deployed AI applications worldwide. These figures clearly establish the United States' current leadership position. However, that leadership faces an existential threat. The rapid acceleration of AI infrastructure development and investment globally, with particularly explosive growth in Asia-Pacific [10], demonstrates that other nations recognize compute as the strategic resource of this century. If the United States cannot translate its current advantage into sustained market presence in allied nations, competitors will fill that space with their own technologies, standards, and operational models.

Patterns Shaping the American AI Stack

From our vantage point inside the AI infrastructure market, several patterns are becoming clear:

First, competition and openness across the stack continue to shape which technologies advance and which ones gain real adoption, particularly among allies that need systems they can integrate quickly. At the same time, this competitive innovation is driving a flourishing American AI ecosystem, which has knock-on effects for national priorities like American manufacturing, reindustrialization, and supply chain resilience. The diversity of approaches, from Groq's inference-optimized LPU architecture to NVIDIA's training-focused GPU platforms to emerging specialized accelerators, creates options that centralized, state-directed competitors cannot match.

Second, operational oversight of AI systems by U.S. companies and by trusted allied countries is gaining importance, not as a constraint, but as a practical way to manage risk as AI systems expand across borders. The ability to verify compliance, ensure security protocols are maintained, and respond to emerging threats depends on clear lines of operational authority. American operational oversight during critical early deployment phases provides assurance that systems are configured, maintained, and operated according to agreed security standards.

Third, the emerging American AI Stack is becoming a framework in its own right, influencing how partners think about standards, long-term reliability, and the pace of technological change. When nations choose American AI infrastructure, they are not just buying hardware; they are adopting architectural patterns, interoperability standards, and operational practices that shape their digital ecosystems for decades. This is the modern equivalent of setting railroad gauge standards or telecommunications protocols: the choices made now will determine whose systems can easily integrate with whose, and therefore whose influence grows.

Taken together, these observations are not merely descriptive; they point toward specific policy opportunities. Each pattern reflects how the market currently operates and how policy can either enable or constrain that operation. The pillars below translate these observations into concrete recommendations for program design, moving from general principles to specific implementation mechanisms.

A Roadmap for American AI Dominance

Building on the Administration's work, we propose an approach to exporting the American AI Stack that offers greater flexibility for allies and clearer pathways for domestic innovation, while staying firmly aligned with the Administration's objective: ensuring American AI dominance in the most consequential technology competition of this century.

The following sections detail three policy pillars:

- Pillar I proposes promoting innovation and competition across all layers of the American AI stack through a modular, ecosystem-focused approach that reflects how the market actually operates.

- Pillar II recommends anchoring exports within American operational oversight during deployment, enabling the speed and predictability that commercial deployments require.

- Pillar III outlines how to create governance structures built for the pace of AI innovation while establishing the American AI Stack as the global standard that others align with.

Together, these recommendations provide a framework for translating America's current AI leadership into sustained global influence by enabling the competitive, innovative, and interoperable ecosystem that is already the source of American technological advantage.

Pillar I: Enabling Market-Driven Participation and Competition

The Commerce Department's RFI on consortium structure demonstrates the Administration's thoughtful approach to these questions. Rather than dictating organizational forms, the Commerce Department is seeking industry input on how private-sector-led consortia can best serve national security objectives while preserving competitive dynamics. This consultative approach recognizes that, given their unique perspectives and hands-on experience navigating the complexities of the AI stack, companies may have a better grasp of integration requirements than regulators, and that overly rigid structures could unintentionally constrain the innovation speed that gives American AI its competitive edge.

Our view, detailed below, is that the program should establish clear security and interoperability standards while allowing certified providers maximum flexibility in how they collaborate. Some deployments will require formal consortium structures with designated leadership; others will succeed through arm's-length marketplace transactions. The common thread should be certification rigor, not organizational prescription.

Policy Opportunities

- Pre-Certification of Stack Providers — Establish a rolling certification process for U.S. companies and trusted allied partners that meet defined compliance, security, and interoperability standards. Certification should be component-specific rather than requiring full-stack offerings, allowing specialized providers to participate and new entrants to join certified rosters quarterly. This enables partner nations to select certified components that fit their specific deployment requirements while maintaining rigorous security oversight.

- Flexible Consortium Formation — Allow certified providers to form project-specific consortia without pre-approval when integration requirements demand it, provided the consortium lead is a certified U.S. entity and all members are pre-certified. Consortia register their formation with Commerce but operate under private-sector leadership, preserving the industry-led model while maintaining export control visibility.

- Anti-Concentration Safeguards — Prevent market lock-in by prohibiting exclusivity requirements in certification, allowing providers to participate in multiple consortia or operate independently, and conducting periodic market access reviews. Create expedited certification pathways for small and medium enterprises to ensure the program preserves the competitive diversity that drives American AI innovation.

- Allied Partner Integration — Allow certified foreign entities from trusted jurisdictions to participate where U.S. industrial depth is limited, provided U.S. entities lead any consortium including foreign participants, foreign participants have no ties to countries of concern, and all comply with U.S. re-export controls.
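The consortium rules in the bullets above are precise enough to express as a checklist. The following Python sketch is hypothetical: the Provider fields, the "US" country-code convention, and the trusted-jurisdiction set are illustrative assumptions, not a Commerce Department schema, and the sketch does not model the condition that foreign participation be limited to areas where U.S. industrial depth is limited. It returns the list of rule violations for a proposed consortium, so an empty list means the proposal meets the stated criteria.

```python
"""Hypothetical eligibility check for a project-specific consortium.

Encodes the consortium rules described in prose. Field names, the "US"
country code, and the trusted-jurisdiction set are illustrative
assumptions, not an official schema.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    country: str                      # assumed ISO-style code, e.g. "US", "JP"
    certified: bool                   # holds a component-specific certification
    ties_to_country_of_concern: bool
    accepts_reexport_controls: bool


def consortium_problems(lead: Provider, members: list[Provider],
                        trusted: set[str]) -> list[str]:
    """Return every rule the proposed consortium breaks (empty list = eligible)."""
    problems: list[str] = []
    # The lead must be a certified U.S. entity.
    if lead.country != "US" or not lead.certified:
        problems.append(f"lead {lead.name} must be a certified U.S. entity")
    # All other members must be pre-certified.
    for p in members:
        if not p.certified:
            problems.append(f"{p.name} is not pre-certified")
    # Foreign participants need a trusted jurisdiction and no ties to
    # countries of concern; every participant accepts U.S. re-export controls.
    for p in [lead, *members]:
        if p.country != "US":
            if p.country not in trusted:
                problems.append(f"{p.name} is not from a trusted jurisdiction")
            if p.ties_to_country_of_concern:
                problems.append(f"{p.name} has ties to a country of concern")
        if not p.accepts_reexport_controls:
            problems.append(f"{p.name} has not accepted U.S. re-export controls")
    return problems


if __name__ == "__main__":
    lead = Provider("LeadCo", "US", True, False, True)   # hypothetical providers
    ally = Provider("AllyCo", "JP", True, False, True)
    print(consortium_problems(lead, [ally], trusted={"JP"}))  # prints []
```

Because each proposal is checked independently and nothing records exclusive membership, the same certified provider can appear in several consortia, consistent with the anti-concentration safeguard.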

Pillar II: American Operational Oversight and Lifecycle Accountability

Once certified providers are competing in a dynamic marketplace, the question becomes: how do we maintain security and compliance as these systems deploy globally? The answer lies in operational oversight.

Tracking where advanced hardware ends up provides necessary but insufficient assurance. Real security comes from maintaining American oversight, or that of trusted American partners, throughout each system's operational lifecycle: who manages the data, maintains the infrastructure, and controls access once deployed. Without continuous visibility and accountability, even U.S.-built technology can be repurposed in ways that undermine national security objectives.

American operational oversight provides the compliance verification, security assurance, and risk management that both U.S. national security and allied confidence require. Below are opportunities to embed supervision standards into the export framework, while enabling the commercial deployment speed that market competition demands.

Policy Opportunities

- Trusted Supervisor Standard — Require U.S. supervision as baseline eligibility for export program participation. Supervising entities must be headquartered in the United States and subject to U.S. jurisdiction, and must retain supervision over system configuration, access controls, maintenance protocols, and security updates throughout the deployment lifecycle. This ensures exported systems remain under American supervision with clear accountability chains while allowing operational structures that meet commercial requirements.

- Standardized Lifecycle Attestations — Replace case-by-case export documentation with standardized attestation templates covering ownership structure, operational control, compliance posture, and end-use commitments. Attestations signed by C-level officers maintain transparency while requiring less disclosure of proprietary information, and imposing less administrative burden, than detailed technical documentation for each export transaction.

- Data Center Security Standards — Ensure facilities hosting program-exported systems, regardless of location, meet all applicable cybersecurity and physical access standards. For infrastructure partners that build, own, or operate data centers but are not traditional technology companies, require transparent ownership disclosure, designation of a certified U.S. technology partner with operational oversight responsibility, and compliance with the same security standards applied to technology providers.

- Continuous Compliance Verification — Shift from one-time export approvals to ongoing compliance verification through annual attestation renewal, mandatory incident reporting, retained BIS audit authority, and clear processes for swift suspension of export authorizations if compliance failures emerge. This approach provides operators with deployment certainty while ensuring the government maintains robust oversight throughout system lifecycles.
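The continuous-verification model can likewise be sketched as a status function evaluated over an authorization's lifetime. This is a hypothetical illustration: the 365-day renewal period, the 30-day warning window, and the choice to treat an unreported incident, a failed BIS audit, or a lapsed attestation as grounds for suspension are assumptions layered onto the prose, not program rules.

```python
"""Hypothetical lifecycle status for a program export authorization.

Assumptions (not program rules): annual renewal means 365 days, renewal
is flagged 30 days early, and an unreported incident, a failed BIS audit,
or a lapsed attestation each trigger suspension.
"""
from datetime import date, timedelta
from enum import Enum

RENEWAL_PERIOD = timedelta(days=365)   # assumed meaning of "annual"
WARNING_WINDOW = timedelta(days=30)    # assumed early-renewal notice


class Status(Enum):
    ACTIVE = "active"
    RENEWAL_DUE = "renewal due"
    SUSPENDED = "suspended"


def authorization_status(last_attestation: date, today: date,
                         unreported_incidents: int, audit_failed: bool) -> Status:
    """Evaluate an authorization on a given day."""
    # Compliance failures suspend the authorization regardless of dates.
    if audit_failed or unreported_incidents > 0:
        return Status.SUSPENDED
    expires = last_attestation + RENEWAL_PERIOD
    if today > expires:
        return Status.SUSPENDED                # attestation lapsed
    if today >= expires - WARNING_WINDOW:
        return Status.RENEWAL_DUE              # renew before expiry
    return Status.ACTIVE


if __name__ == "__main__":
    signed = date(2025, 1, 1)
    for day in (date(2025, 6, 1), date(2025, 12, 15), date(2026, 1, 2)):
        print(day, authorization_status(signed, day, 0, False).value)
```

Evaluating the same function on any day, rather than once at approval, is what distinguishes ongoing verification from a one-time export decision.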

Pillar III: Export Governance at the Pace of Innovation

A marketplace of certified providers maintaining operational oversight addresses who can export and how systems should be managed. The final question is how quickly American AI can reach allies before competitors fill the gap.

In AI, speed is strategic. Hardware architectures, model optimizations, and orchestration capabilities now update on cycles measured in weeks, not years. Export processes designed for slower-moving industries—where products remain current for years—create mismatches when applied to AI, where a six-month licensing delay can mean exporting yesterday's technology into tomorrow's markets.

But speed without standards creates fragmentation. The real strategic opportunity lies in pairing rapid deployment with predictable governance frameworks already recognized within the U.S. export regime. By grounding systems in interoperability protocols that are tested, transparent, and widely supported, the U.S. can deliver AI capabilities quickly while ensuring international partners can integrate them smoothly. In doing so, we do more than compete in individual export markets—we help shape the long-term architecture of allied AI ecosystems for decades to come.

The recommendations below outline how to structure export governance that matches the pace of AI innovation while embedding American standards as the framework others align with.

Policy Opportunities

- Conditional Approval with Continuous Monitoring — Enable qualified exporters to deploy systems under conditional export authorizations, with compliance verified through ongoing monitoring rather than pre-deployment review of every configuration change. Companies meeting defined security and compliance standards receive pre-clearance to export certified system configurations to approved destinations, with systems shipping immediately and minor updates proceeding without additional licensing review. BIS retains authority for rapid revocation upon evidence of non-compliance, balancing deployment speed with robust oversight.

- Inference-Priority Licensing — Create an expedited review track for inference-only systems, recognizing their lower proliferation risk compared to training infrastructure. Systems designed and deployed exclusively for running pre-trained models, with technical controls preventing large-scale training use, receive accelerated licensing decisions to approved destinations. The vast majority of global AI deployment is inference, not training; recognizing this distinction allows the U.S. to maintain appropriate controls on highest-risk systems while enabling American companies to compete effectively in the rapidly expanding inference market.

- Standardized Documentation Templates — Provide consistent attestation and technical documentation templates across export applications to streamline agency review and reduce compliance burden. Templates for ownership structure, technical specifications, security protocols, and end-use commitments should be developed collaboratively with industry to capture necessary information while minimizing duplicative requests across applications and protecting proprietary details.

- U.S. Standards as Global Infrastructure — Use the export program to establish American technical standards as the default for AI infrastructure internationally. Condition program participation on adherence to open, documented technical interfaces enabling mix-and-match deployment of certified components. Establish standard metrics for inference performance and energy efficiency that become reference points for global procurement decisions. Coordinate U.S. industry representation in international standards bodies to ensure American practices shape emerging global norms, creating path dependencies that extend U.S. influence far beyond individual export transactions.
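The two expedited tracks described above can be read as a routing rule applied to each export request. The sketch below is hypothetical: the field names, the precedence of conditional authorization over inference-priority review, and the fallback to standard review are assumptions for illustration, not BIS procedure. It does not model the separate treatment of minor updates to an already authorized configuration.

```python
"""Hypothetical routing of an export request to a licensing track.

Field names and the precedence among tracks are illustrative
assumptions based on the prose, not BIS procedure.
"""
from dataclasses import dataclass
from enum import Enum


class Track(Enum):
    CONDITIONAL = "conditional authorization: ship now, monitor continuously"
    EXPEDITED_INFERENCE = "expedited inference-only review"
    STANDARD = "standard license review"


@dataclass(frozen=True)
class ExportRequest:
    destination_approved: bool
    exporter_qualified: bool        # meets defined security and compliance standards
    configuration_certified: bool   # a pre-cleared, certified system configuration
    inference_only: bool            # built and deployed only to run pre-trained models
    training_controls: bool         # technical controls preventing large-scale training


def licensing_track(req: ExportRequest) -> Track:
    """Pick the fastest track the request qualifies for."""
    if not req.destination_approved:
        return Track.STANDARD
    if req.exporter_qualified and req.configuration_certified:
        return Track.CONDITIONAL
    if req.inference_only and req.training_controls:
        return Track.EXPEDITED_INFERENCE
    return Track.STANDARD


if __name__ == "__main__":
    req = ExportRequest(destination_approved=True, exporter_qualified=False,
                        configuration_certified=False, inference_only=True,
                        training_controls=True)
    print(licensing_track(req).value)
```

Routing requests for non-approved destinations to standard review keeps both fast tracks limited to approved destinations, as the bullets above specify.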

Conclusion

The race for global AI leadership will not be won by any single company, model, or breakthrough. It will be won by the nation that builds the most effective system for translating innovation into trusted, deployed infrastructure at global scale. The United States possesses the raw materials for victory: the most innovative technology companies, the most advanced research ecosystem, the strongest network of allied partnerships, and the most dynamic competitive markets. But these advantages mean nothing if American AI cannot reach allies faster, operate more reliably, or integrate more seamlessly than alternatives from countries of concern. The American AI Export Program represents an opportunity to convert current technological leadership into sustained strategic advantage.

About Groq

Groq builds and operates the inference infrastructure that powers real-time AI with the speed and cost it requires. Founded in 2016, Groq created the LPU and GroqCloud to run advanced models faster and more affordably. Groq designs its own hardware, owns the full software stack, and operates the inference platform that serves more than 2.8 million developers and leading Fortune 500 enterprises worldwide. Groq is a core part of the American AI Stack and works with partners globally to deploy and operate large-scale inference clusters.