10/21/2024

The Crucial Role of Context Length in Large Language Models for Business Applications

As businesses look to leverage Large Language Models (LLMs) for conversational AI, generative AI, and analytics, a crucial factor often gets overlooked: context length, also known as context window. The length of input text that an LLM can process significantly affects not only its performance and quality, but also the types of solutions and user experiences it can support. In this post, we'll explore the importance of LLM context length and provide practical guidance on selecting the optimal context length for your business application, ensuring you get the most out of your AI investment.

What is Context Length?

Context length refers to the maximum number of tokens (words, characters, or subwords) that an LLM can process in a single request; in most models, the prompt and the generated response share this budget. The limit is typically determined by the model's architecture, training data, and computational resources. For example, context lengths of popular LLMs range from 512 tokens for GPT-1, to 4,096 for Llama 2 and 8,192 for Llama 3, all the way to 128,000 for Llama 3.1.
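
A minimal sketch of what this limit means in practice, assuming (as in most decoder-only LLMs) that the prompt and the generated response share one window. The dictionary keys are illustrative labels for the context lengths quoted above, not official model identifiers, and token counts are assumed to come from the model's own tokenizer; no real API is called.

```python
# Context lengths quoted in this post, in tokens. The keys are illustrative
# labels, not official model identifiers.
CONTEXT_LENGTHS = {
    "gpt-1": 512,
    "llama-2": 4_096,
    "llama-3": 8_192,
    "llama-3.1": 128_000,
}


def fits_in_context(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """True if the prompt plus the reserved response length fits the window.

    Assumes prompt and response share one window, as in most decoder-only LLMs.
    """
    return prompt_tokens + max_output_tokens <= CONTEXT_LENGTHS[model]


def remaining_output_budget(model: str, prompt_tokens: int) -> int:
    """Tokens left for the response once the prompt is counted (never negative)."""
    return max(0, CONTEXT_LENGTHS[model] - prompt_tokens)


if __name__ == "__main__":
    print(fits_in_context("llama-3", 6_000, 1_024))   # True: 7,024 <= 8,192
    print(fits_in_context("llama-2", 3_500, 1_024))   # False: 4,524 > 4,096
    print(remaining_output_budget("llama-3", 8_000))  # 192
```

The same prompt can fit one model and overflow another, which is why the window size matters as soon as inputs grow.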

In an interview with Lex Fridman on the future of LLMs, Sam Altman, CEO of OpenAI, commented, "If we dream into the distant future... we'll have context length of several billion. You will feed in all of your information, all your history over time, and it will just get to know you better and better."

https://www.youtube.com/watch?v=pJ3aygJnc9M

Context Length Impact on LLM Performance & Quality

Context length has a significant impact on an LLM's performance, particularly in business applications where accuracy and relevance are required. A longer context length generally allows for higher-quality outputs, while a shorter one generally means faster processing.

Here are some ways in which context length can affect your LLM-powered application:

- Understanding the Question: Longer context lengths mean users can submit longer prompts, giving the LLM the opportunity to build a more nuanced understanding of the prompt’s words, phrases, and ideas. This leads to better understanding and accuracy in tasks like conversational AI, text summarization, and sentiment analysis. Longer context lengths can also benefit coding tasks, such as code completion and debugging, by allowing the LLM to see more of the surrounding code and provide more accurate and relevant suggestions.

- More Context & Iteration: Model inputs can include not just the user’s query, but also additional information to give the model more context. That could include the entire conversation with the user up to that point, or additional content that may be useful or necessary. For example, if a user wants the model to help rewrite an article or essay, the input would include not only the request but also the original draft. This is particularly important in applications like chatbots, where understanding the entire conversation and additional relevant user information is crucial. This additional context also allows an LLM to improve its responses through iteration, which can lead to higher-quality outputs for the user.

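- Efficiency & Scalability: Shorter context lengths can lead to faster processing times and lower computational costs, because the work a model does grows with the number of tokens it processes (quadratically in sequence length for standard self-attention). However, this may come at the cost of reduced accuracy and relevance.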
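
To make the "more context" point concrete, here is a minimal sketch of how an application might assemble one model input from a system prompt, earlier conversation turns, and an attached draft. The role/content message layout mirrors common chat-completion APIs but is an assumption here; `build_input`, `input_tokens`, and `estimate_tokens` are hypothetical helpers, and the 4-characters-per-token estimate is only a rough rule of thumb for English text. Use the model's own tokenizer for real counts.

```python
from typing import Optional


def estimate_tokens(text: str) -> int:
    """Rough estimate of about 4 characters per token for English text (a heuristic)."""
    return 0 if not text else max(1, len(text) // 4)


def build_input(system_prompt: str, history: list[dict], request: str,
                draft: Optional[str] = None) -> list[dict]:
    """Assemble one model input: instructions, earlier turns, then the new request."""
    user_content = request if draft is None else f"{request}\n\n{draft}"
    return [
        {"role": "system", "content": system_prompt},
        *history,  # earlier user/assistant turns are resent with every request
        {"role": "user", "content": user_content},
    ]


def input_tokens(messages: list[dict]) -> int:
    """Estimated number of tokens the assembled input occupies."""
    return sum(estimate_tokens(m["content"]) for m in messages)


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Can you help me tighten my essay?"},
        {"role": "assistant", "content": "Sure. Please paste the draft."},
    ]
    draft = "Context length shapes what an LLM can do. " * 50  # stand-in draft
    bare = build_input("You are a careful editor.", [], "Rewrite this essay.")
    full = build_input("You are a careful editor.", history, "Rewrite this essay.", draft)
    print(input_tokens(bare), input_tokens(full))  # the draft and history dominate
```

Everything in the assembled list counts against the same window, so richer context and longer conversations trade directly against the speed and cost benefits of a shorter context length.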

While some providers tout their speed, they are not always transparent about context length, a crucial parameter to understand when choosing both a model and a provider to power your applications. Where others cut context length to compete on speed, Groq offers some models at multiple context lengths so you can optimize for your use case, and lists all of them clearly on our pricing page.

When to Use Short Context Length

Some AI applications work fine with a short context length. For example, a sentiment analysis solution that processes short inputs, such as product review blurbs or social media posts and comments, may need only 256 tokens to do the job. The same is true of chatbots that accept only a limited set of user inputs. In these cases, a developer may choose to limit context length in order to optimize inference speed and efficiency.

Here are some implications to consider for shorter context lengths:

- More explicit instructions: System prompts (the instructions given to a model that guide how it responds to a user’s query) need to be more concise and focused so that they give the LLM clear, explicit instructions, as the model has limited context to understand the task. Remember that system prompt tokens count toward the model’s context length.

- Fewer assumptions: System prompts should make fewer assumptions about the user's intent or context, as the LLM may not have enough information to infer the correct meaning.

- More specific keywords: System prompts should include specific keywords or phrases to help the LLM understand the task and generate relevant responses.

- Less contextualization: System prompts may not be able to rely on contextual information, such as previous conversations or user history, to inform the LLM's response.
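
A minimal sketch of the short-context case, assuming the 256-token window from the sentiment example and a few tokens reserved for a one-word label. It shows that system prompt tokens come out of the same budget as the user's text. The whitespace `tokenize` function is a stand-in assumption (real subword tokenizers usually produce more tokens than words), and `fit_to_window` is a hypothetical helper.

```python
SYSTEM_PROMPT = (
    "Classify the sentiment of the review as positive, negative, or neutral. "
    "Reply with one word."
)


def tokenize(text: str) -> list[str]:
    """Stand-in tokenizer: splits on whitespace (real tokenizers use subwords)."""
    return text.split()


def fit_to_window(system_prompt: str, user_text: str,
                  context_length: int = 256, reserved_output: int = 8) -> str:
    """Truncate user_text so system prompt + input + reserved output fit the window."""
    budget = context_length - len(tokenize(system_prompt)) - reserved_output
    if budget <= 0:
        raise ValueError("system prompt leaves no room for user input")
    return " ".join(tokenize(user_text)[:budget])


if __name__ == "__main__":
    review = "great product " * 200  # 400 words, far over budget
    fitted = fit_to_window(SYSTEM_PROMPT, review)
    print(len(tokenize(fitted)))      # 233 = 256 - 15 - 8
```

Every word added to the system prompt is one fewer word of review the model can see, which is why short-context prompts need to stay lean.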

When Longer Context Length Is Necessary

Many AI solutions require longer context lengths in order to deliver high-quality outputs. For example, any solution that produces long-form content (articles, blog posts, research papers) needs substantial input, such as source material and instructions, to provide the context for a high-quality output. Solutions that involve back-and-forth dialogue with the user, where each question builds on previous outputs, can quickly accumulate long inputs, which also calls for a longer context length.

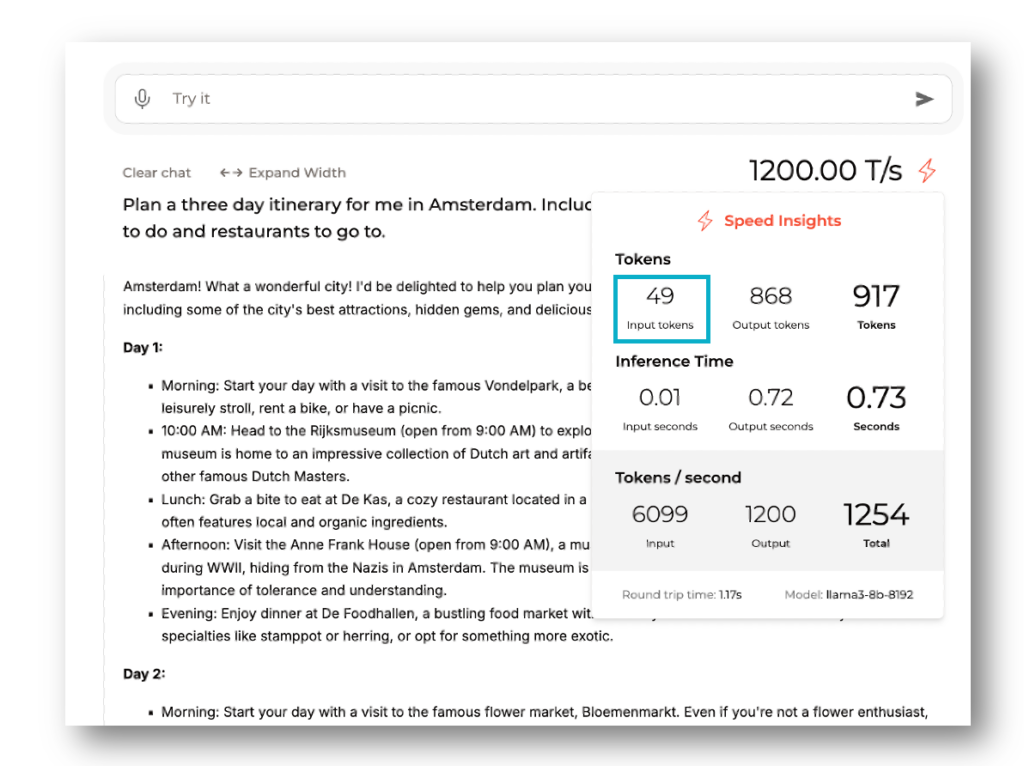

To demonstrate this, we turn to GroqChat. Let’s say you are heading to a distant city; we’ll choose Amsterdam. You ask GroqChat: “Plan a three day itinerary for me in Amsterdam. Include specific recommendations on things to do and restaurants to go to.” GroqChat instantly produces an itinerary (at 1,200 tokens per second, using Llama 3 8B with an 8,192-token context length). Hover over the 1,200 tokens-per-second speed metric and you’ll see that the input was only 49 tokens.

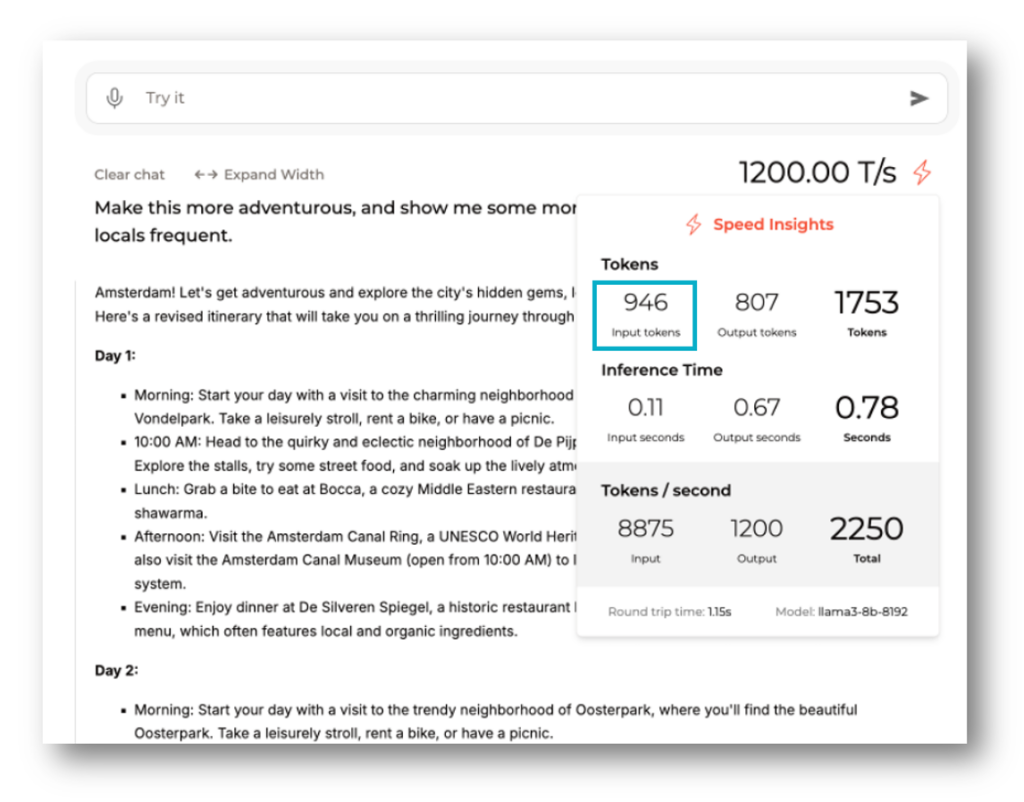

But then you want to iterate on this query. Ask it: “Make this more adventurous, and show me some more out of the way restaurants that the locals frequent.” The response comes back instantly, but now when you hover over the speed metric you see that the input is about 946 tokens, because the input now includes the entire output of the previous query. Repeat this a few times and you can see how quickly you’ll exceed the model’s 8,192-token context limit.
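
This growth can be simulated with a short sketch. It assumes, as in the GroqChat example, that each new input resends the previous input plus the full previous reply and then adds the follow-up question. The 49-token opening prompt comes from the example; the 900-token reply and 25-token follow-up are hypothetical round numbers, not measurements, and `turns_until_overflow` is a hypothetical helper.

```python
def turns_until_overflow(context_length: int, first_prompt: int,
                         reply: int, follow_up: int) -> int:
    """Return the first turn whose prompt plus reply no longer fits the window.

    Assumes every turn resends the whole conversation so far.
    """
    if reply + follow_up <= 0:
        raise ValueError("conversation must grow each turn")
    prompt, turn = first_prompt, 1
    while prompt + reply <= context_length:
        prompt += reply + follow_up  # previous reply and the new question are appended
        turn += 1
    return turn


if __name__ == "__main__":
    # Hypothetical sizes: 49-token opener, ~900-token replies, ~25-token follow-ups.
    print(turns_until_overflow(8_192, 49, 900, 25))    # 9
    print(turns_until_overflow(128_000, 49, 900, 25))  # 139
```

With these assumed sizes, eight turns fit in an 8,192-token window, while a 128,000-token window holds 138 before the conversation has to be trimmed or summarized.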

Similarly, certain domains, like healthcare or finance, may require longer context lengths to capture complex relationships and nuances in the input text. Inputs may include large amounts of client data. Supplying such data in the input lets the model use that context in its response without retaining it in the model itself, which can be important for privacy purposes (e.g., HIPAA compliance).

With longer context lengths, system prompts can be more nuanced and context-dependent. Users can provide additional background information in the system prompt, such as the model's "persona" and instructions on when to call a particular tool (as in agentic applications). Here are some implications to consider for longer context lengths:

- Less explicit instructions: System prompts can provide less explicit instructions, relying on the LLM's ability to understand the context from the previous messages in the conversation and generate relevant responses.

- More assumptions: Since they can include more context, longer system prompts can make more assumptions about the user's intent or context, as the LLM has more information to infer the correct meaning.

- Fewer specific keywords: System prompts may not need to include specific keywords or phrases, as the LLM can understand the context and generate relevant responses.

Potential Use Cases for Longer Context Length

Multi-agent Solutions

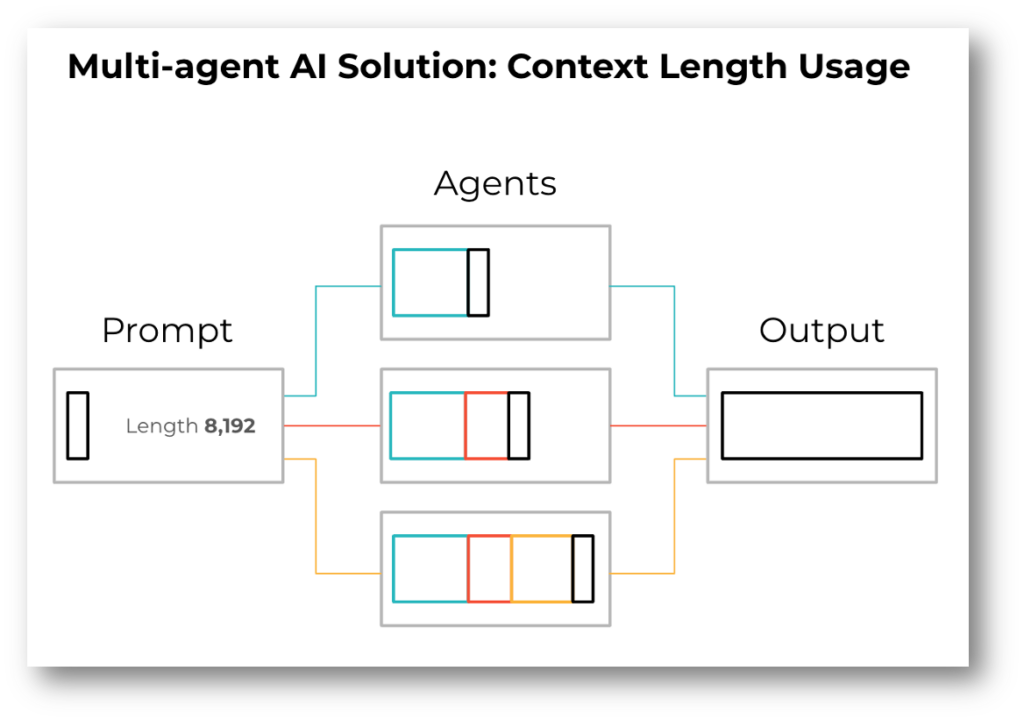

Businesses and developers building more complex AI solutions often take a multi-agent approach. These solutions use multiple models, often task-specific, operating in parallel or in series. As each model completes its task, it passes its output to the next model as input, so input lengths can grow quite long. When estimating context length requirements, business technologists and developers need to consider whether they plan to use a multi-agent architecture.
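
A minimal sketch of why inputs grow in a serial multi-agent pipeline, assuming each agent receives the original task plus every earlier agent's output. The agent names, token counts, and the 8,192-token window are hypothetical illustration values, and both functions are hypothetical helpers rather than part of any agent framework.

```python
from typing import Optional


def pipeline_input_sizes(task_tokens: int, output_tokens: list[int]) -> list[int]:
    """Input size seen by each agent when it receives the task plus all earlier outputs."""
    sizes, carried = [], task_tokens
    for produced in output_tokens:
        sizes.append(carried)   # what this agent has to read
        carried += produced     # its output is passed along to every later agent
    return sizes


def first_overflow(agents: list[str], sizes: list[int], context_length: int) -> Optional[str]:
    """Name of the first agent whose input exceeds the window, or None if all fit."""
    for name, size in zip(agents, sizes):
        if size > context_length:
            return name
    return None


if __name__ == "__main__":
    agents = ["researcher", "drafter", "reviewer", "summarizer"]  # hypothetical roles
    sizes = pipeline_input_sizes(500, [4_000, 3_000, 1_000, 300])
    print(sizes)                                 # [500, 4500, 7500, 8500]
    print(first_overflow(agents, sizes, 8_192))  # summarizer
```

Agents late in the chain see the largest inputs, so they are usually the ones that set the pipeline's context length requirement.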

Retrieval Augmented Generation

LLMs can be enhanced with a framework called Retrieval Augmented Generation (RAG), which combines LLM text generation with a retrieval step, often powered by a smaller, more specialized model, that fetches relevant information from a database or knowledge graph. The retrieved information is then added to the model's input to ground the generated text. With longer context windows, more retrieved material fits into the input, so the model can better understand the topic, entities, and relationships involved, leading to more accurate and relevant responses. Longer contexts also help the model capture subtle nuances and details, resolve ambiguous terms, and provide supporting evidence and examples. This can mean more coherent, fluent, and persuasive responses that better handle complex topics and follow-up questions. Long enough context lengths can even make RAG unnecessary: if the context window is large enough and the documents are short enough, everything can fit directly into the context window.
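
A minimal sketch of that trade-off: if all documents fit the token budget, skip retrieval and send everything; otherwise rank the chunks and pack the most relevant ones until the budget is full. The keyword-overlap `score` is a deliberately simple stand-in for the embedding-based retrieval real RAG systems typically use, word counts stand in for tokenizer counts, and `build_context` is a hypothetical helper.

```python
def word_count(text: str) -> int:
    """Stand-in for a real tokenizer's token count."""
    return len(text.split())


def score(query: str, chunk: str) -> int:
    """Toy relevance score: number of distinct query words that appear in the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def build_context(query: str, chunks: list[str], budget: int) -> list[str]:
    """Pick chunks to place in the prompt without exceeding the token budget."""
    if sum(word_count(c) for c in chunks) <= budget:
        return list(chunks)  # everything fits: no retrieval step needed
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    selected, used = [], 0
    for chunk in ranked:  # greedily pack the most relevant chunks first
        cost = word_count(chunk)
        if used + cost <= budget:
            selected.append(chunk)
            used += cost
    return selected


if __name__ == "__main__":
    docs = [
        "amsterdam canal tours run daily",
        "rotterdam port statistics for 2023",
        "amsterdam restaurants near the canal",
    ]
    query = "amsterdam canal restaurants"
    print(len(build_context(query, docs, budget=20)))  # 3: all documents fit
    print(build_context(query, docs, budget=10))       # the two most relevant chunks
```

A larger budget, which a longer context window provides, pushes more queries into the first branch, where nothing has to be left out.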

Code-based Solutions

Given longer context windows, code-based solutions can analyze and process sequential data, such as text or time series, that spans a longer period in a single pass. These solutions can capture nuanced patterns and relationships in the data, improving accuracy and decision-making. This is particularly valuable in applications such as language modeling, where a longer context window can enable more accurate predictions and more natural language generation. Code-based solutions can also process large amounts of data quickly and efficiently, making real-time analysis possible for applications like predictive maintenance, demand forecasting, and anomaly detection. Additionally, these solutions can be adapted to different use cases and domains, making them a strong option for developers and organizations seeking to derive insights and value from their data.
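
When a sequence is longer than the window, a common workaround is to process it in overlapping windows, and a longer context length means fewer windows to stitch together. A minimal sketch, where `sliding_windows` is a hypothetical helper, the window size and overlap are illustrative parameters, and each window would be handed to a model separately:

```python
def sliding_windows(seq: list, window: int, overlap: int) -> list[list]:
    """Split seq into windows of at most `window` items sharing `overlap` items."""
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    if not seq:
        return []
    step = window - overlap
    windows, start = [], 0
    while True:
        windows.append(seq[start:start + window])
        if start + window >= len(seq):  # this window reaches the end
            break
        start += step
    return windows


if __name__ == "__main__":
    readings = list(range(10))  # e.g. ten time-series readings
    for w in sliding_windows(readings, window=4, overlap=1):
        print(w)
    # [0, 1, 2, 3]
    # [3, 4, 5, 6]
    # [6, 7, 8, 9]
    print(len(sliding_windows(readings, window=10, overlap=0)))  # 1
```

The overlap preserves some context across window boundaries, but patterns longer than one window can still be missed, which is the limitation a longer context length relaxes.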

Questions to Ask

Context length is a key factor to consider when comparing and selecting models for an AI solution. Shorter context length models generally run faster but can deliver lower quality, especially on more complex tasks, while longer context models generate higher quality outputs but may be slower.

Questions business technologists should ask:

- What are the use cases and user experience requirements for my solution? Will my users be ok with the constraints of shorter context lengths?

- How complex is my solution? Does the model need more context provided in the input stream (e.g. user data, source material, cumulative conversation) in order to provide good results?

- Will developers be using multiple agents and models to create the solution?

Best Practices for Working with Context Length

To get the most out of your LLM-powered application, follow these best practices:

- Experiment with Different Context Lengths: Test your application with different context lengths to determine the optimal length for your specific use case.

- Use Context Length as a Hyperparameter: Treat context length as a hyperparameter that can be tuned and optimized for your specific application.

- Consider Using Hierarchical or Multi-resolution Models: These models can process input texts at multiple resolutions, allowing for more efficient and effective processing of long input texts.

- Monitor Performance and Adjust: Continuously monitor your application's performance and adjust the context length as needed to ensure optimal results.

- Explore LLM-enhancing Tools: Certain tools can help you make the most of your chosen context length, such as the Toolhouse Semantic Memory Search tool, which equips LLMs with the ability to retrieve past conversations without additional prompting.
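
Treating context length as a hyperparameter can be as simple as a sweep. A minimal sketch, assuming you supply an `evaluate(length)` function (hypothetical here) that scores your application on a held-out set with inputs truncated to `length` tokens. Because shorter windows are generally faster and cheaper, it returns the smallest candidate that meets your quality bar. The toy evaluator in the example is made up purely for illustration and reflects no real measurements.

```python
from typing import Callable, Iterable, Optional


def pick_context_length(candidates: Iterable[int],
                        evaluate: Callable[[int], float],
                        min_quality: float) -> Optional[int]:
    """Return the smallest context length whose quality score meets min_quality."""
    for length in sorted(candidates):
        if evaluate(length) >= min_quality:
            return length
    return None  # no candidate is good enough; revisit the design or the bar


def toy_eval(length: int) -> float:
    """Illustrative only: quality rises with length and is capped at 1.0."""
    return min(1.0, length / 8_192)


if __name__ == "__main__":
    print(pick_context_length([32_768, 2_048, 8_192, 4_096], toy_eval, 0.5))  # 4096
    print(pick_context_length([2_048, 4_096], toy_eval, 0.9))                 # None
```

Re-running the same sweep as usage patterns change is one way to put the "monitor and adjust" advice into practice.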

Conclusion

Context length is a critical consideration when building LLM-powered applications for business. By understanding the impact of context length on LLM performance and choosing the right context length for your specific use case, you can unlock the full potential of your application. Remember to experiment with different context lengths, use context length as a hyperparameter, and consider using hierarchical or multi-resolution models to optimize your application's performance. With the right context length, you can build more accurate, relevant, and effective LLM-powered applications that drive business success.