GroqCloud™ Adds Qwen-2.5-32b & DeepSeek r1 Support

One of the things we love most about our community of almost one million GroqCloud™ developers is hearing what they want next, and this model drop is one of the most requested additions. That’s right, Qwen-2.5-32b and DeepSeek-r1-distill-qwen-32b are now live on GroqCloud! Both models have a 128k context window, and Groq has enabled the full context window for both on GroqCloud. Note that the max completion tokens are 8k for Qwen-2.5-32b and 16k for DeepSeek-r1-distill-qwen-32b. You can explore our pricing information here.
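To make those limits concrete, here is a minimal sketch of a helper that works out how many completion tokens a request can ask for. The exact token counts (131,072, 8,192, and 16,384 for "128k", "8k", and "16k"), the lowercase model IDs, and the idea that prompt and completion share the context window are all assumptions for illustration, not confirmed GroqCloud values.

```python
# Assumed limits: the post only says "128k", "8k" and "16k". The exact
# power-of-two token counts and the lowercase model IDs are assumptions.
MODEL_LIMITS = {
    "qwen-2.5-32b": {
        "context_window": 131_072,
        "max_completion_tokens": 8_192,
    },
    "deepseek-r1-distill-qwen-32b": {
        "context_window": 131_072,
        "max_completion_tokens": 16_384,
    },
}


def completion_budget(model: str, prompt_tokens: int, requested: int) -> int:
    """Return the largest completion length that fits both limits.

    Assumes the prompt and the completion share one context window.
    """
    if prompt_tokens < 0 or requested < 0:
        raise ValueError("token counts must be non-negative")
    limits = MODEL_LIMITS[model]
    remaining = limits["context_window"] - prompt_tokens
    if remaining <= 0:
        raise ValueError("prompt does not fit in the context window")
    # The request is capped by the per-model completion limit and by
    # whatever space the prompt leaves in the context window.
    return min(requested, limits["max_completion_tokens"], remaining)


if __name__ == "__main__":
    print(completion_budget("qwen-2.5-32b", 1_000, 20_000))
    print(completion_budget("deepseek-r1-distill-qwen-32b", 131_000, 20_000))
```

A helper like this is mainly useful for long prompts, where the space left in the context window can be smaller than the per-model completion cap.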

Performance

Qwen-2.5-32b is currently running at ~397 tokens per second (t/s) and DeepSeek-r1-distill-qwen-32b at 388 t/s on GroqCloud. Stay tuned for official third-party benchmarks from Artificial Analysis. Both models also support tool calling and JSON mode on GroqCloud.
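For readers who have not used tool calling before, the sketch below shows roughly what a request and the follow-up handling might look like. It assumes an OpenAI-compatible chat completions format (`tools`, `tool_choice`, `tool_calls`) and a lowercase model ID. The `get_weather` tool and the sample assistant message are hypothetical and exist only for illustration. No network call is made.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical local tool; a real one would call a weather service."""
    return f"Sunny in {city}"


# Tool description in the OpenAI-compatible "function" format (assumed).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def build_tool_request(model: str, question: str) -> dict:
    """Build a chat completions request body that offers the tool."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "tools": TOOLS,
        "tool_choice": "auto",
    }


def run_tool_calls(message: dict) -> list[dict]:
    """Run each tool call the model asked for and return 'tool' messages."""
    registry = {"get_weather": get_weather}
    results = []
    for call in message.get("tool_calls") or []:
        func = registry[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": func(**args),
        })
    return results


if __name__ == "__main__":
    print(json.dumps(build_tool_request("qwen-2.5-32b", "Weather in Paris?"), indent=2))
```

The messages returned by `run_tool_calls` would be appended to the conversation and sent back so the model can write its final answer.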

Comparisons

Compared to Qwen2, Qwen2.5 offers:

- Increased knowledge plus boosts for coding and mathematics

- Big improvements in instruction following, understanding structured data (e.g., tables), and generating structured outputs, especially JSON

- Greater resilience to diverse system prompts, enhancing role-play implementation and condition-setting for chatbots

- Long-context support for up to 128K tokens, with generation of up to 8K tokens
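Since structured JSON output is one of the headline improvements, and both models support JSON mode on GroqCloud, here is a rough sketch of a JSON-mode request body and a parser for the reply. The field names (`response_format`, `max_completion_tokens`, `choices[0].message.content`) assume an OpenAI-compatible chat completions format, and the model ID, prompt, and keys are illustrative assumptions. Nothing is sent over the network.

```python
import json


def build_json_request(prompt: str, max_completion_tokens: int = 1024) -> dict:
    """Build a JSON-mode request body (OpenAI-compatible format assumed)."""
    return {
        "model": "qwen-2.5-32b",  # assumed lowercase model ID
        "messages": [
            {
                "role": "system",
                # JSON mode generally expects the prompt to mention JSON.
                "content": 'Reply with a single JSON object with the keys "language" and "year".',
            },
            {"role": "user", "content": prompt},
        ],
        "response_format": {"type": "json_object"},
        "max_completion_tokens": max_completion_tokens,
    }


def parse_json_reply(response: dict) -> dict:
    """Extract the first choice's content and decode it as a JSON object."""
    content = response["choices"][0]["message"]["content"]
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return data


if __name__ == "__main__":
    body = build_json_request("When was Python first released?")
    print(json.dumps(body, indent=2))
```

Even with JSON mode on, it is worth validating the decoded object against the keys you expect before using it.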

DeepSeek-r1-distill-qwen-32b delivers very competitive performance on CodeForces with a rating of 1691, the best among all r1 distilled models and second only to o1-mini (1820). It also achieves strong scores on AIME 2024 (83.3%), MATH-500 (94.3%), GPQA Diamond (62.1%), and LiveCodeBench (57.2%), falling just behind DeepSeek-R1-Distill-Llama-70b among the r1 distilled models while outperforming OpenAI’s o1-mini and gpt-4o, making it a top choice for advanced mathematical reasoning and coding tasks.

Security on GroqCloud

When using GroqCloud to access the Qwen-2.5-32b and DeepSeek-r1-distill-qwen-32b models, you're using Groq's US-based infrastructure and services for inference. These models are deployed on Groq hardware in our datacenters. Groq doesn’t train models, and user data (inputs and outputs) is stored only temporarily in memory for the duration of your session.

Note that your prompts for Qwen-2.5-32b and DeepSeek-r1-distill-qwen-32b are handled the same way we handle prompts for all other models you make requests to. We take privacy seriously. See our Trust Center and terms of use, terms of sale, and privacy policy for more information.

For information regarding the models themselves, please see the Model Cards and license terms published by Qwen and DeepSeek on GitHub, Hugging Face, and other repositories.

- Qwen-2.5-32b: Model Card and license terms

- DeepSeek-r1-distill-qwen-32b: Model Card and license terms

Ready to Build?

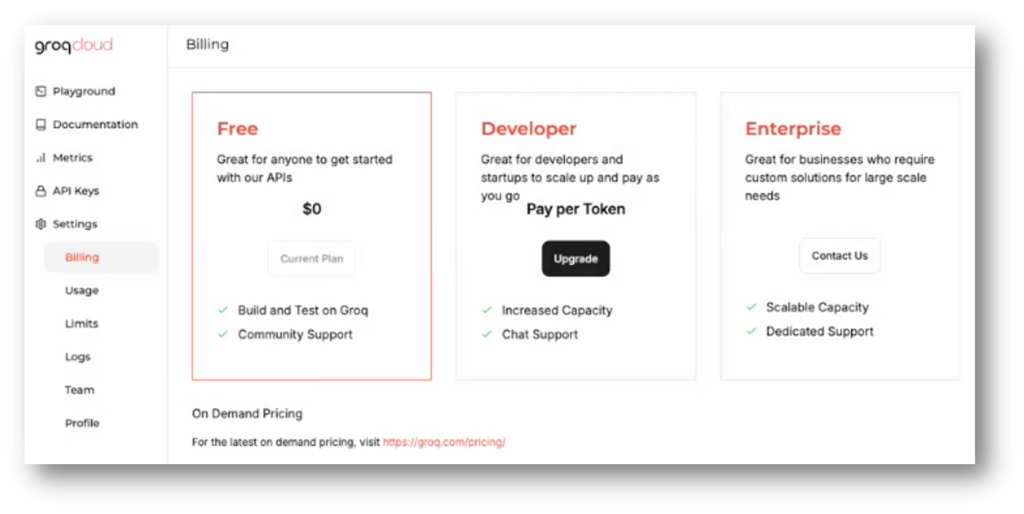

We’ve expanded access to GroqCloud™ by ramping up our Developer Tier, a self-serve access point (or an upgrade if you’ve been using our Free Tier until now). It’s easy: anyone with a credit card can now sign up for the Developer Tier and pay as they go for on-demand access to GroqCloud™.

If you’re looking to take your access to the next level, our enterprise solutions are built for custom deployments and large-scale needs that require enterprise-grade capacity and dedicated support. Reach out to our team here about Enterprise access.