Llama 3.3 70B – New Scaling Paradigm in AI

Model Offers Comparable Quality to 3.1 405B at Less Than 1/5th the Size

Quality, Not Just Quantity

Today, Meta released Llama 3.3 70B, and it’s a game-changer. By leveraging new post-training techniques, Meta has improved performance across the board, reaching state-of-the-art results in areas like reasoning, math, and general knowledge. What's impressive is that this model delivers results similar in quality to the larger Llama 3.1 405B model at a fraction of the cost. That's a huge win for developers who want high-quality text-based applications without breaking the bank.

What’s even more impressive is how Meta is challenging the “death of the scaling law” narrative. Yes, the scaling law was a useful myth that helped us understand the early days of LLMs. But the reality is that Meta is now pushing past what were thought to be the traditional scaling limits. They're not just throwing more parameters at the problem – they're improving the underlying model without changing its fundamental architecture. We’re in a new, law-defying era of AI innovation where the focus is on quality, not just quantity, which is a 2025 prediction from Groq CEO and Founder Jonathan Ross.

At Groq, we're excited to be part of this new era, working with Meta and others to unlock the full potential of AI through our open ecosystem. Here’s our launch plan for offering this model via GroqCloud™ Developer Console:

- llama-3.1-70b-specdec is now updated to llama-3.3-70b-specdec. Starting today, llama-3.1-70b-specdec will alias to llama-3.3-70b-specdec

- Users can now test the new model, llama-3.3-70b-versatile

- Groq will maintain the Llama 3.1 70B model, llama-3.1-70b-versatile, for the next two weeks as a user transition period

- You can explore the GroqCloud deprecation page for more information
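For code that still hardcodes the older model IDs, a small lookup can make the switch explicit. The sketch below is only an illustration: `resolve_model_id` and the two dictionaries are hypothetical names, and treating llama-3.3-70b-versatile as the replacement for llama-3.1-70b-versatile is an assumption based on the launch plan above, not an official migration tool.

```python
import warnings

# Alias announced in the launch plan: the old specdec ID now points at the new one.
ALIASED = {"llama-3.1-70b-specdec": "llama-3.3-70b-specdec"}

# Assumption: llama-3.1-70b-versatile users move to llama-3.3-70b-versatile
# once the two-week transition period ends.
RETIRING = {"llama-3.1-70b-versatile": "llama-3.3-70b-versatile"}


def resolve_model_id(model_id: str) -> str:
    """Return the model ID to request, warning if the given one is being retired."""
    if model_id in ALIASED:
        return ALIASED[model_id]
    if model_id in RETIRING:
        replacement = RETIRING[model_id]
        warnings.warn(
            f"{model_id} is in its transition period; switching to {replacement}",
            DeprecationWarning,
            stacklevel=2,
        )
        return replacement
    # Any other model ID is passed through unchanged.
    return model_id
```

Centralizing the mapping this way means the transition touches one place in a codebase rather than every call site.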

What This Means for Developers

The new Llama 3.3 70B model performs better at coding, reasoning, math, general knowledge tasks, instruction following, and tool use. Users can now:

- Get JSON outputs for function calling

- Get step-by-step chain-of-thought reasoning outputs with more accurate responses

- Get greater coverage of top programming languages, with greater accuracy

- Expect improved code feedback that helps identify and fix issues, as well as improved error handling

- Invoke tools while reliably respecting default and specified parameters

- Expect task-aware tool calls that avoid calling a tool where none is useful
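To make the tool-use items above more concrete, here is a rough sketch of the developer side of a function call. It builds a request body in the OpenAI-compatible chat completions shape and parses tool calls out of a response. The `get_weather` tool, its schema, the helper names, and the sample response are all hypothetical and made up for illustration. No network call is made, and the payload shape is an assumption rather than something this post specifies.

```python
import json

MODEL_ID = "llama-3.3-70b-versatile"  # model ID named in this post

# Hypothetical tool definition; the arguments are described with JSON Schema.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}


def build_request(user_message: str) -> dict:
    """Build a chat completions request body that offers the model one tool."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [WEATHER_TOOL],
        "tool_choice": "auto",  # let the model decide whether a tool is useful
    }


def extract_tool_calls(response: dict) -> list:
    """Return (tool_name, arguments) pairs; an empty list means no tool was called."""
    message = response["choices"][0]["message"]
    calls = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        # Arguments arrive as a JSON-encoded string and need to be decoded.
        calls.append((fn["name"], json.loads(fn["arguments"])))
    return calls


# Made-up response in the assumed shape, used only to exercise the parser.
SAMPLE_RESPONSE = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": None,
                "tool_calls": [
                    {
                        "id": "call_0",
                        "type": "function",
                        "function": {
                            "name": "get_weather",
                            "arguments": "{\"city\": \"Lisbon\"}",
                        },
                    }
                ],
            }
        }
    ]
}

if __name__ == "__main__":
    print(json.dumps(build_request("What's the weather in Lisbon?"), indent=2))
    print(extract_tool_calls(SAMPLE_RESPONSE))
```

A response with no `tool_calls` field yields an empty list, which is the case to expect when the model decides that no tool is useful for the request.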

We've tested Llama 3.3 70B and found that its quality scores are surprisingly close to those of the larger Llama 3.1 405B model. That's right: a model with 70B parameters, less than 1/5th the size of the 405B model (roughly 17%), delivers similar quality. This is a huge deal for developers who've had to choose between speed and quality in the past. With Llama 3.3 70B, they can have both. And since this model is already the most popular choice for our GroqCloud developers, this update is a major win for anyone building text-based applications. Stay tuned for independent third-party benchmarks – those are coming next.

The Llama Family Tree

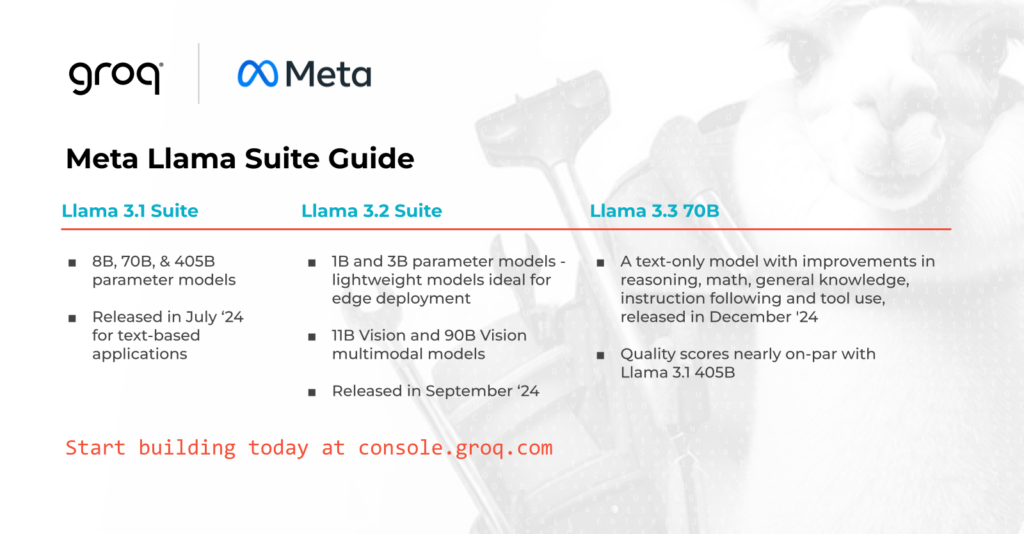

As the Llama model family continues to grow, don’t feel bad if your head is spinning. Here’s a quick overview of how each of the models in the Llama suite serves end users.

- Llama 3.1 Suite: Includes 8B, 70B, and 405B parameter models and was originally released in July 2024 for text-based applications

- Llama 3.2 Suite: Includes lightweight models ideal for edge deployment in 1B and 3B parameter sizes, plus multimodal models, 11B Vision and 90B Vision, and was originally released in September 2024

- Llama 3.3 70B: A text-only model with improvements in reasoning, math, general knowledge, instruction following and tool use – check out the model card for the Meta quality benchmarks

Groq and Meta agree that openly available models, from the Llama model family and beyond, drive innovation and are the right path forward. That’s why Groq is committed to continuing to host the latest models, working with our partners in the AI ecosystem, and serving our GroqCloud community of over 650,000 developers. We think that by making these models available, and making them fast, we can accelerate progress and create new possibilities for developers and enterprises alike.