API Access

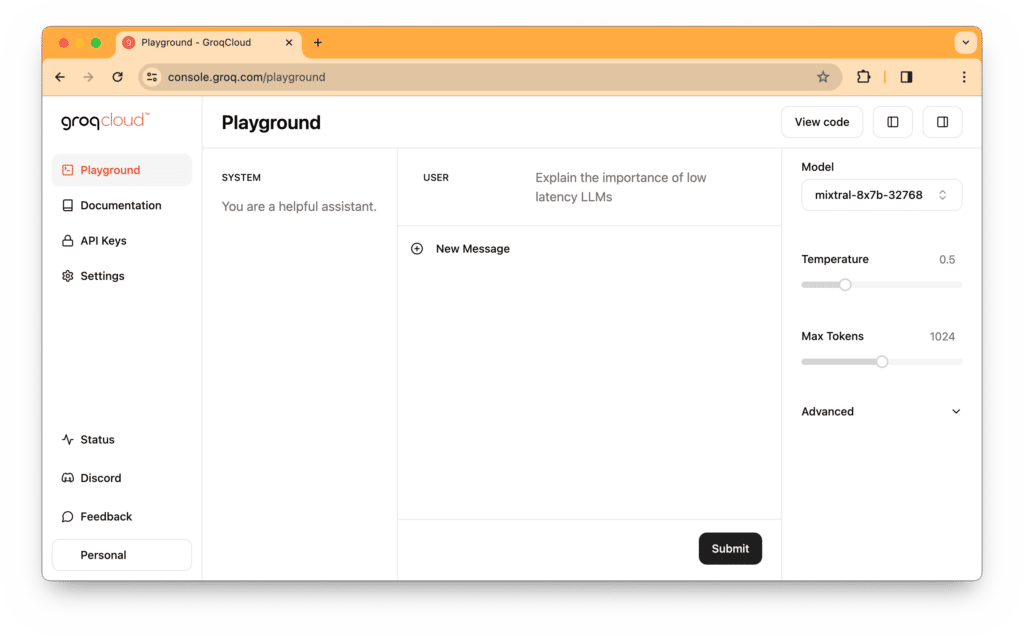

GroqCloud™ has multiple levels of API access.

Developer access can be obtained completely self-serve through Playground on GroqCloud. There you can obtain your API key and access our documentation as well as our terms and conditions on Playground. Join our Discord community here.

If you are currently using OpenAI API, you just need three things to convert over to Groq:

- Groq API key

- Endpoint

- Model

Do you need the fastest inference at data center scale? We should chat if you need:

- Custom rate limits

- Fine-tuned models

- Custom SLAs

- Dedicated support

Let’s talk to ensure we can provide the right solution for your needs. Please fill out this form and tell us a little about your project. After submitting, a Groqster will be in touch with you shortly.

Price

Other models, such as Mistral and CodeLlama, are available for specific customer requests. Send us your inquiries here.

| Model | Current Speed | Price per 1M Tokens (Input/Output) |

|---|---|---|

| Llama 2 70B (4096 Context Length) | ~300 tokens/s | $0.70/$0.80 |

| Llama 2 7B (2048 Context Length) | ~750 tokens/s | $0.10/$0.10 |

| Mixtral, 8x7B SMoE (32K Context Length) | ~480 tokens/s | $0.27/$0.27 |

| Gemma 7B (8K Context Length) | ~820 tokens/s | $0.10/$0.10 |

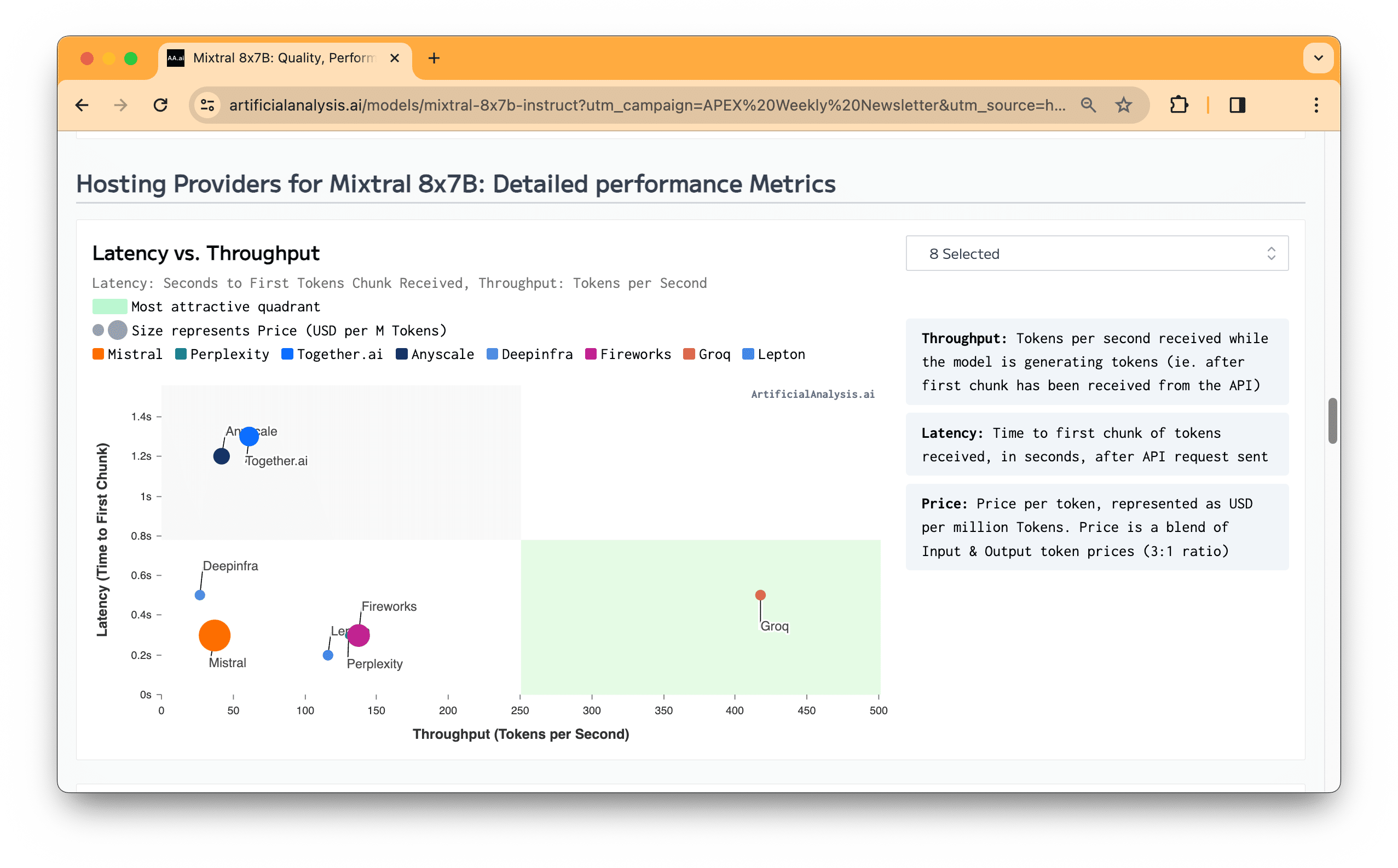

Fastest Inference, Period.

In this public benchmark, Mistral.ai’s Mixtral 8x7B Instruct running on the Groq LPU™ Inference Engine outperformed all other cloud-based inference providers at up to 15x faster output tokens throughput.